Blog

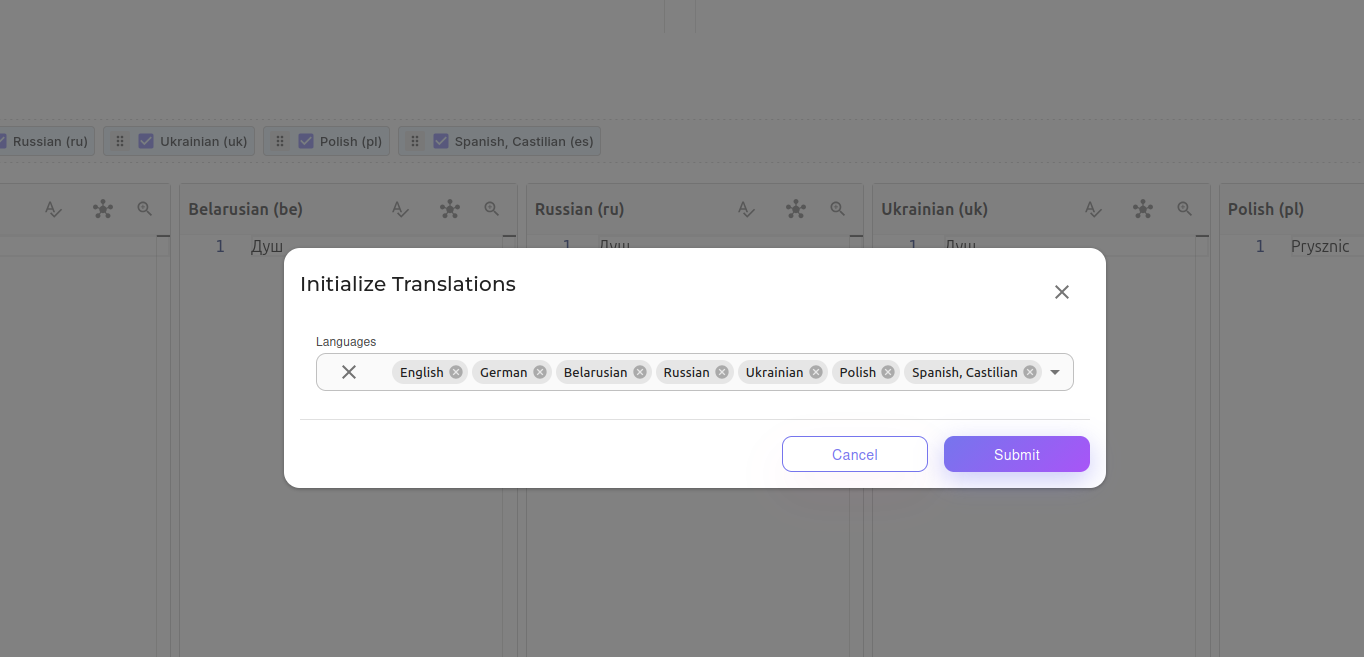

Insights on AI, development, and modern technologies


What you'll learn: How to build AI agent systems with LangGraph - from basic concepts to working code. We'll create an article-writing pipeline with multiple AI agents that collaborate, review each other's work, and iterate until the reviewer approves the result.

PART 1: Basic Concepts

What is a Graph?

Before diving into LangGraph, it's essential to understand what a graph is in programming.

A Graph is a Data Structure

Imagine a subway map:

- Stations are the nodes

- Lines between stations are the edges

```text
Typical Graph:          Subway Map (Analogy):

    [A]                 [Victory Square]
     │                         │
     ▼                         ▼
    [B]───────►[C]         [October]───►[Kupala]
     │                         │
     ▼                         ▼
    [D]              [Cultural Institute]
```

In LangGraph:

- Nodes are functions (agents) that perform tasks.

- Edges are rules indicating the order in which tasks are executed.

Why is LangGraph Needed?

Problem: Traditional AI Programs are Linear

```text
Typical Chain:

Question → LLM → Answer

or

Question → Tool 1 → LLM → Tool 2 → Answer
```

This works for simple tasks, but what if:

- You need to go back and redo something?

- You need to verify the result and possibly repeat?

- You need multiple agents with different roles?

Solution: LangGraph Allows Creating Cycles

```text
LangGraph (Graph):

Question → [Researcher] → [Writer] → [Reviewer]
                             ▲           │
                             │           ▼
                             │         Good?
                             │           │
                             └─── NO ────┤
                                         │ YES
                                         ▼
                                     [Result]
```

What is "state"?

State is the memory of the program.

Imagine you are writing an article with a team:

- The researcher gathered materials → needs to write them down somewhere.

- The writer created a draft → needs to pass it to the reviewer.

- The reviewer wrote comments → needs to return them to the writer.

State is like a "basket" where everyone puts their results and from which they take data.

```text
┌─────────────────────────────────────────────────────────────┐
│                          STATE                              │
├─────────────────────────────────────────────────────────────┤
│                                                             │
│  topic:          "What we are writing about"                │
│  research:       "Materials from the Researcher"            │
│  draft:          "Draft from the Writer"                    │
│  review:         "Comments from the Reviewer"               │
│  finalArticle:   "Final Article"                            │
│  iterationCount: number of writer passes                    │
│  messages:       [history of all messages]                  │
│                                                             │
└─────────────────────────────────────────────────────────────┘
        ▲                    ▲                    ▲
        │                    │                    │
    Researcher             Writer              Reviewer
(reads and writes)   (reads and writes)   (reads and writes)
```

PART 2: Annotation - How State is Created

What is Annotation?

Annotation is a way of describing the structure of a state. It is like creating a form with fields.

Analogy: Survey

Imagine you are creating a survey:

```text
┌─────────────────────────────────────────┐
│             EMPLOYEE SURVEY             │
├─────────────────────────────────────────┤
│ Name: ___________________               │
│ Age: _____                              │
│ Work Experience (years): _____          │
│ Skills List: ___, ___, ___              │
└─────────────────────────────────────────┘
```

In code, it looks like this:

```typescript
// ANALOGY: Creating a survey form
const Survey = {
  name: '', // Text field
  age: 0, // Number
  experience: 0, // Number
  skills: [], // List
};
```

In LangGraph: Annotation.Root

In LangGraph, state is created using Annotation.Root(). This is a special function that describes the structure of your data - what fields exist and how they should be updated.

```typescript
import { Annotation } from '@langchain/langgraph';

const ResearchState = Annotation.Root({
  // Each field is described through Annotation<Type>
  topic: Annotation<string>({...}),
  research: Annotation<string>({...}),
  draft: Annotation<string>({...}),
  // etc.
});
```

Each field inside Annotation.Root has two important properties: reducer (how to update the value) and default (initial value). We'll explore these next.

What is a Reducer?

Problem: How to merge data?

What should you do when multiple agents write to the same field?

```text
Agent 1 writes: topic = "Topic A"
Agent 2 writes: topic = "Topic B"

What should be in topic? "Topic A"? "Topic B"? "Topic A + Topic B"?
```

Solution: a Reducer, a function that resolves the conflict

Reducer is a function that takes:

- Current value (what is already there)

- New value (what comes in)

It returns the result (what will be kept).

```typescript
reducer: (current, update) => result;
//           ▲       ▲           ▲
//           │       │           └── What will be kept
//           │       └── New value
//           └── Current value
```

Examples of Reducers:

1. Reducer "REPLACE"

The new value completely replaces the old one.

```typescript
// Code:
reducer: (current, update) => update;

// Example:
// Current: "Topic A"
// New:     "Topic B"
// Result:  "Topic B"  ← new replaces old
```

Analogy: You overwrite a file - the new content replaces the old one.

```text
file.txt
──────────────
Was:  "Old text"
      ▼ (overwrite)
Now:  "New text"
```

2. Reducer "APPEND"

New elements are added to the existing ones.

```typescript
// Code:
reducer: (current, update) => [...current, ...update];

// Example:
// Current: ["Message 1", "Message 2"]
// New:     ["Message 3"]
// Result:  ["Message 1", "Message 2", "Message 3"]
```

Analogy: You add new entries to a diary - the old ones remain.

```text
diary.txt
──────────────
Was:  "Monday: did A"
      "Tuesday: did B"
      ▼ (append)
Now:  "Monday: did A"
      "Tuesday: did B"
      "Wednesday: did C"  ← added
```
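Both reducers share the same (current, update) => result shape, so applying a series of updates is just a left fold. The dependency-free sketch below shows this in plain TypeScript; the Reducer type and the foldUpdates helper are illustrative names, not part of LangGraph's API.

```typescript
// A reducer combines the value already in state with an incoming update
type Reducer<T> = (current: T, update: T) => T;

// REPLACE: the newest value wins
const replace = <T>(_current: T, update: T): T => update;

// APPEND: concatenate arrays, keeping everything already stored
const append = <T>(current: T[], update: T[]): T[] => [...current, ...update];

// Apply updates one after another, starting from the initial value
function foldUpdates<T>(reducer: Reducer<T>, initial: T, updates: T[]): T {
  return updates.reduce(reducer, initial);
}

const topic = foldUpdates<string>(replace, '', ['Topic A', 'Topic B']);
const messages = foldUpdates<string[]>(append, [], [
  ['Message 1', 'Message 2'],
  ['Message 3'],
]);

console.log(topic); // Topic B
console.log(messages); // [ 'Message 1', 'Message 2', 'Message 3' ]
```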

Full example of the state:

```typescript
import { Annotation } from '@langchain/langgraph';
import type { BaseMessage } from '@langchain/core/messages';

const ResearchState = Annotation.Root({
  // ═══════════════════════════════════════════════════════
  // FIELD: messages
  // ═══════════════════════════════════════════════════════
  messages: Annotation<BaseMessage[]>({
    // REDUCER: Add new messages to existing ones
    reducer: (current, update) => [...current, ...update],
    // DEFAULT: Initial value - empty array
    default: () => [],
  }),
  //
  // How it works:
  // 1. Beginning: messages = []
  // 2. Researcher: messages = [] + [AIMessage] = [AIMessage]
  // 3. Writer: messages = [AIMessage] + [AIMessage] = [AIMessage, AIMessage]
  // 4. And so on - all messages are stored

  // ═══════════════════════════════════════════════════════
  // FIELD: topic
  // ═══════════════════════════════════════════════════════
  topic: Annotation<string>({
    // REDUCER: Replace old value with new one
    reducer: (_, update) => update, // "_" means "ignore"
    default: () => '',
  }),
  //
  // How it works:
  // 1. Beginning: topic = ""
  // 2. User: topic = "" → "LangChain"
  // If someone else writes to topic, the old value is lost

  // ═══════════════════════════════════════════════════════
  // FIELDS: research, draft, review, finalArticle (same "replace" pattern)
  // ═══════════════════════════════════════════════════════
  research: Annotation<string>({ reducer: (_, update) => update, default: () => '' }),
  draft: Annotation<string>({ reducer: (_, update) => update, default: () => '' }),
  review: Annotation<string>({ reducer: (_, update) => update, default: () => '' }),
  finalArticle: Annotation<string>({ reducer: (_, update) => update, default: () => '' }),

  // ═══════════════════════════════════════════════════════
  // FIELD: iterationCount (iteration counter)
  // ═══════════════════════════════════════════════════════
  iterationCount: Annotation<number>({
    reducer: (_, update) => update, // Simply replace
    default: () => 0, // Start from 0
  }),
  //
  // The writer returns iterationCount: state.iterationCount + 1 each time
  // 1. Start: iterationCount = 0
  // 2. Writer (1): iterationCount = 0 + 1 = 1
  // 3. Writer (2): iterationCount = 1 + 1 = 2
  // 4. Writer (3): iterationCount = 2 + 1 = 3
});

// The TypeScript type of the full state, used by the nodes below
type ResearchStateType = typeof ResearchState.State;
```

Visualization of Reducers:

```text
┌─────────────────────────────────────────────────────────────────┐
│  REDUCER: REPLACE                                               │
├─────────────────────────────────────────────────────────────────┤
│                                                                 │
│   Current:  █████████████  "Old text"                           │
│                   ▼                                             │
│   New:      ░░░░░░░░░░░░░  "New text"                           │
│                   ▼                                             │
│   Result:   ░░░░░░░░░░░░░  "New text"  ← only new               │
│                                                                 │
└─────────────────────────────────────────────────────────────────┘

┌─────────────────────────────────────────────────────────────────┐
│  REDUCER: APPEND                                                │
├─────────────────────────────────────────────────────────────────┤
│                                                                 │
│   Current:  [█] [█] [█]              ← three elements           │
│                  +                                              │
│   New:      [░] [░]                  ← two new                  │
│                  =                                              │
│   Result:   [█] [█] [█] [░] [░]      ← all together             │
│                                                                 │
└─────────────────────────────────────────────────────────────────┘
```

What is default?

default is a function that returns the initial value of a field.

```typescript
default: () => value
```

Why a function and not just a value?

This is a common JavaScript pitfall. If a plain object or array were used as the default, every graph run would share the same reference, so a change made in one run would show up in all the others!

```typescript
// BAD: a plain array literal is created once and shared
default: [] // Every run would refer to the same array!
// (LangGraph's Annotation also expects a function here.)

// GOOD: the function creates a new array each time
default: () => [] // Each run gets its own array
```
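The difference is easy to demonstrate in plain TypeScript. In this sketch, fromShared and fromFactory are hypothetical stand-ins for how a framework might build a fresh state for each run; they are not LangGraph code.

```typescript
// Shared literal: every "run" receives the very same array object
const sharedDefault: string[] = [];
const fromShared = () => ({ messages: sharedDefault });

// Factory: every call builds a brand-new array
const factoryDefault = (): string[] => [];
const fromFactory = () => ({ messages: factoryDefault() });

const runA = fromShared();
const runB = fromShared();
runA.messages.push('only meant for run A');

const runC = fromFactory();
const runD = fromFactory();
runC.messages.push('only meant for run C');

console.log(runB.messages.length); // 1 (leaked from run A)
console.log(runD.messages.length); // 0 (isolated)
```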

Examples:

```typescript
// For a string
default: () => '' // Empty string

// For a number
default: () => 0 // Zero

// For an array
default: () => [] // Empty array

// For an object
default: () => ({}) // Empty object

// For a boolean
default: () => false // false
```

PART 3: Nodes - Agents

What is a node?

Node is a function that:

- Receives the full state

- Performs some work (for example, calls an LLM)

- Returns a partial state update

Analogy: Worker on the Assembly Line

```text
┌─────────────────────────────────────────────────────────────┐
│                        ASSEMBLY LINE                        │
├─────────────────────────────────────────────────────────────┤
│                                                             │
│   [Box] ──► [Worker 1] ───────► [Worker 2] ──────►          │
│                 │                   │                       │
│                 ▼                   ▼                       │
│            Adds part A         Adds part B                  │
│                                                             │
└─────────────────────────────────────────────────────────────┘

Each worker:
1. Sees what has already been done (state)
2. Does their part of the work
3. Passes it on with additions
```

Node Structure in Code:

```typescript
// Template: someField and doSomething are placeholders
async function myNode(
  state: ResearchStateType // ← INPUT: Full state
): Promise<Partial<ResearchStateType>> { // ← OUTPUT: Partial update
  // 1. Read data from the state
  const data = state.someField;

  // 2. Do the work
  const result = await doSomething(data);

  // 3. Return the update (only what has changed)
  return {
    someField: result,
  };
}
```

Important: Partial<State>

A node doesn't need to return the entire state - only the fields that changed. LangGraph will merge your partial update with the existing state using the reducers you defined.

```typescript
// The full state has 7 fields:
state = {
  topic: "...",
  research: "...",
  draft: "...",
  review: "...",
  finalArticle: "...",
  messages: [...],
  iterationCount: 0
}

// But the node only needs to return what it changed:
return {
  draft: "New draft", // Changed
  messages: [new AIMessage("...")], // Added
  // The other fields are not mentioned - they stay as they were
}
```
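Conceptually, the merge walks over only the keys present in the update and runs each field's reducer. The sketch below models that with a hypothetical mergeUpdate helper and a per-field reducer table over a trimmed-down state; LangGraph's real implementation is channel-based and more involved, so treat this as a mental model only.

```typescript
interface ArticleState {
  topic: string;
  draft: string;
  messages: string[];
  iterationCount: number;
}

type FieldReducers<S> = {
  [K in keyof S]: (current: S[K], update: S[K]) => S[K];
};

// One reducer per field, mirroring the Annotation definitions above
const reducers: FieldReducers<ArticleState> = {
  topic: (_, update) => update,
  draft: (_, update) => update,
  messages: (current, update) => [...current, ...update], // append
  iterationCount: (_, update) => update,
};

// Run the reducer of a single field
function applyField<K extends keyof ArticleState>(
  target: ArticleState,
  source: ArticleState,
  key: K,
  value: ArticleState[K]
): void {
  target[key] = reducers[key](source[key], value);
}

// Merge a partial update: only keys present in the update are touched
function mergeUpdate(state: ArticleState, update: Partial<ArticleState>): ArticleState {
  const next: ArticleState = { ...state };
  for (const key of Object.keys(update) as (keyof ArticleState)[]) {
    const value = update[key];
    if (value !== undefined) applyField(next, state, key, value);
  }
  return next;
}

const before: ArticleState = {
  topic: 'LangChain',
  draft: '',
  messages: ['Benefits of LangChain'],
  iterationCount: 0,
};

// A writer-style partial update: two fields, the rest untouched
const after = mergeUpdate(before, { draft: 'New draft', messages: ['[Draft 1]'] });

console.log(after.draft); // New draft
console.log(after.messages); // [ 'Benefits of LangChain', '[Draft 1]' ]
console.log(after.topic, after.iterationCount); // LangChain 0
```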

Our nodes in detail

Now let's look at the four nodes in our article-writing system. Each node has a specific role and passes its results to the next one through the shared state.

Node 1: Researcher (researcherNode)

The researcher is the first agent in our pipeline. It takes the topic and generates research materials that will be used by the writer.

```typescript
import { AIMessage } from '@langchain/core/messages';

// Assumes `model` (a LangChain chat model) and `ResearchStateType`
// are defined elsewhere in the project.
async function researcherNode(
  state: ResearchStateType
): Promise<Partial<ResearchStateType>> {
  // ┌─────────────────────────────────────────────────────────────┐
  // │ STEP 1: Get the topic for research                          │
  // └─────────────────────────────────────────────────────────────┘
  // First try the topic field, otherwise fall back to the last message.
  // Note: String(undefined) || '' would yield the truthy string "undefined",
  // so check that a message exists before converting its content.
  const lastMessage = state.messages[state.messages.length - 1];
  const topic = state.topic || (lastMessage ? String(lastMessage.content) : '');

  // ┌─────────────────────────────────────────────────────────────┐
  // │ STEP 2: Form the prompt for the LLM                         │
  // └─────────────────────────────────────────────────────────────┘
  const prompt = `You are an expert researcher. Your task is to gather key information.
Topic: ${topic}
Conduct a brief research...`;

  // ┌─────────────────────────────────────────────────────────────┐
  // │ STEP 3: Call the LLM                                        │
  // └─────────────────────────────────────────────────────────────┘
  const response = await model.invoke([
    { role: 'system', content: prompt }, // Instructions for the AI
    { role: 'user', content: `Research the topic: ${topic}` }, // Request
  ]);

  const research = String(response.content); // Result from the LLM

  // ┌─────────────────────────────────────────────────────────────┐
  // │ STEP 4: Return the state update                             │
  // └─────────────────────────────────────────────────────────────┘
  return {
    research, // Store the research result
    // Add a message to the history
    messages: [new AIMessage({ content: `[Research completed]\n${research}` })],
  };
}
```
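The topic fallback is worth checking in isolation: String(undefined) is the non-empty string "undefined", so an expression like String(x) || '' never reaches the '' branch. A small pure helper makes the intended behaviour testable. resolveTopic and resolveTopicNaive are hypothetical names, and messages are reduced to plain { content } objects for this sketch.

```typescript
interface MessageLike {
  content: unknown;
}

// Pitfall: String(undefined) === 'undefined', which is truthy
function resolveTopicNaive(topic: string, messages: MessageLike[]): string {
  return topic || String(messages[messages.length - 1]?.content) || '';
}

// Safer: only stringify when there actually is a last message
function resolveTopic(topic: string, messages: MessageLike[]): string {
  if (topic) return topic;
  const last = messages[messages.length - 1];
  return last === undefined ? '' : String(last.content);
}

console.log(resolveTopicNaive('', [])); // undefined  (the string 'undefined')
console.log(resolveTopic('', [])); // (an empty line)
console.log(resolveTopic('', [{ content: 'Benefits of LangChain' }])); // Benefits of LangChain
```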

What happens:

```text
INPUT (state):                  OUTPUT (update):
┌─────────────────────┐         ┌─────────────────────┐
│ topic: "LangChain"  │         │ research: "LangCh...│
│ research: ""        │ ──────► │ messages: [+1 msg]  │
│ draft: ""           │         └─────────────────────┘
│ messages: [1 msg]   │
└─────────────────────┘
           │
           ▼ After merging:
┌─────────────────────┐
│ topic: "LangChain"  │
│ research: "LangCh...│ ← updated
│ draft: ""           │
│ messages: [2 msgs]  │ ← added
└─────────────────────┘
```

Node 2: Writer (writerNode)

The writer takes the research and creates an article draft. If this is a revision (after reviewer feedback), it also considers the review comments. Notice how iterationCount helps us track how many times the article has been rewritten.

```typescript
import { AIMessage } from '@langchain/core/messages';

// Assumes `model` and `ResearchStateType` are defined elsewhere.
async function writerNode(
  state: ResearchStateType
): Promise<Partial<ResearchStateType>> {
  // ┌─────────────────────────────────────────────────────────────┐
  // │ STEP 1: Read the research and possible review               │
  // └─────────────────────────────────────────────────────────────┘
  const prompt = `You are a technical writer. Based on the research, write an article.

Research:
${state.research}

${state.review ? `Previous review (consider comments): ${state.review}` : ''}
`;

  // ┌─────────────────────────────────────────────────────────────┐
  // │ STEP 2: Call the LLM                                        │
  // └─────────────────────────────────────────────────────────────┘
  const response = await model.invoke([
    { role: 'system', content: prompt },
    { role: 'user', content: 'Write an article based on the research' },
  ]);

  const draft = String(response.content);

  // ┌─────────────────────────────────────────────────────────────┐
  // │ STEP 3: Return the update                                   │
  // └─────────────────────────────────────────────────────────────┘
  return {
    draft, // Draft of the article
    iterationCount: state.iterationCount + 1, // Increment the counter
    messages: [
      new AIMessage({ content: `[Draft ${state.iterationCount + 1}]` }),
    ],
  };
}
```

What happens on repeat call:

```text
FIRST CALL:                     SECOND CALL (after review):
┌─────────────────────┐         ┌─────────────────────┐
│ research: "..."     │         │ research: "..."     │
│ review: ""          │         │ review: "Refine..." │ ← there are comments!
│ iterationCount: 0   │         │ iterationCount: 1   │
└─────────────────────┘         └─────────────────────┘
           │                               │
           ▼                               ▼
Writing without comments        Considering comments
           │                               │
           ▼                               ▼
┌─────────────────────┐         ┌─────────────────────┐
│ draft: "Version 1"  │         │ draft: "Version 2"  │
│ iterationCount: 1   │         │ iterationCount: 2   │
└─────────────────────┘         └─────────────────────┘
```
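Because the writer's behaviour depends only on the state, its logic can be sketched as a pure function with the LLM call injected, which makes both calls in the diagram testable. Here generate is a hypothetical synchronous stand-in for model.invoke (a fake, so the sketch runs without an API key), and buildWriterPrompt and writerUpdate are illustrative names; the prompt text follows the writer node above.

```typescript
interface WriterInput {
  research: string;
  review: string;
  iterationCount: number;
}

interface WriterUpdate {
  draft: string;
  iterationCount: number;
}

// Build the writer prompt; the review section appears only on revisions
function buildWriterPrompt(state: WriterInput): string {
  const reviewPart = state.review
    ? `Previous review (consider comments): ${state.review}`
    : '';
  return `You are a technical writer. Based on the research, write an article.\n\nResearch:\n${state.research}\n\n${reviewPart}\n`;
}

function writerUpdate(state: WriterInput, generate: (prompt: string) => string): WriterUpdate {
  return {
    draft: generate(buildWriterPrompt(state)),
    iterationCount: state.iterationCount + 1, // one more writer pass
  };
}

// Fake LLM: its answer only reveals whether it saw reviewer comments
const fakeGenerate = (prompt: string): string =>
  prompt.includes('Previous review') ? 'Version 2' : 'Version 1';

const first = writerUpdate({ research: '...', review: '', iterationCount: 0 }, fakeGenerate);
const second = writerUpdate(
  { research: '...', review: 'Refine...', iterationCount: first.iterationCount },
  fakeGenerate
);

console.log(first); // { draft: 'Version 1', iterationCount: 1 }
console.log(second); // { draft: 'Version 2', iterationCount: 2 }
```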

Node 3: Reviewer (reviewerNode)

The reviewer evaluates the draft and decides if it's ready for publication. The key here is the output: if the review contains "APPROVED", the article moves to finalization. Otherwise, it goes back to the writer for improvements. This is what enables the cycle in our graph.

```typescript
import { AIMessage } from '@langchain/core/messages';

// Assumes `model` and `ResearchStateType` are defined elsewhere.
async function reviewerNode(
  state: ResearchStateType
): Promise<Partial<ResearchStateType>> {
  // ┌─────────────────────────────────────────────────────────────┐
  // │ STEP 1: Formulate the review request                        │
  // └─────────────────────────────────────────────────────────────┘
  const prompt = `You are a strict editor. Evaluate the article.

Article:
${state.draft}

Evaluate based on the criteria:
1. Accuracy of information
2. Structure and logic
3. Language quality
4. Completeness of the topic coverage

If the article is good, say "APPROVED". If improvements are needed, provide specific recommendations.
`;

  // ┌─────────────────────────────────────────────────────────────┐
  // │ STEP 2: Get the review                                      │
  // └─────────────────────────────────────────────────────────────┘
  // The message list is elided here; presumably the system prompt plus
  // a user request, as in the other nodes.
  const response = await model.invoke([...]);
  const review = String(response.content);

  // ┌─────────────────────────────────────────────────────────────┐
  // │ STEP 3: Return the review                                   │
  // └─────────────────────────────────────────────────────────────┘
  return {
    review, // Review (either "APPROVED" or comments)
    messages: [new AIMessage({ content: `[Review]\n${review}` })],
  };
}
```

Two possible outcomes:

```text
OPTION A: The article is good       OPTION B: Needs improvement
┌───────────────────────────┐       ┌───────────────────────────┐
│ review: "APPROVED.        │       │ review: "Improve:         │
│ The article is excellent!"│       │ 1. Add examples           │
│                           │       │ 2. Clarify the terms"     │
└───────────────────────────┘       └───────────────────────────┘
              │                                   │
              ▼                                   ▼
          Moving to                          Returning to
          finalizer                             writer
```

Node 4: Finalizer (finalizerNode)

The finalizer is the simplest node - it just copies the approved draft to the finalArticle field, marking the end of our workflow. It runs when the reviewer approves the article, or when the iteration limit forces finalization.

```typescript
async function finalizerNode(
  state: ResearchStateType
): Promise<Partial<ResearchStateType>> {
  // Simple node - copies the draft to the final article
  return {
    finalArticle: state.draft, // Final article
    messages: [new AIMessage({ content: `[READY]\n\n${state.draft}` })],
  };
}
```

What happens:

```text
INPUT:                          OUTPUT:
┌─────────────────────┐         ┌─────────────────────┐
│ draft: "Ready..."   │ ──────► │ finalArticle:       │
│ finalArticle: ""    │         │   "Ready..."        │
└─────────────────────┘         └─────────────────────┘
```

PART 4: Edges

What is an edge?

Edge is a rule that states: "After this node, the following node is executed."

Analogy: Arrows on the Diagram

```text
Recipe steps:

[Make dough] ────► [Add sauce] ────► [Add cheese] ────► [Bake]
     │                  │                 │                │
     ▼                  ▼                 ▼                ▼
TRANSITION         TRANSITION        TRANSITION          END
```

Two Types of Transitions in LangGraph:

1. Simple Transitions (addEdge)

Always go to the same node.

```typescript
workflow.addEdge('researcher', 'writer');
//                    ▲            ▲
//                    │            └── Where we are going
//                    └── Where we are coming from

// Meaning: after researcher, we ALWAYS go to writer
```

Visualization:

```text
[researcher] ───────────────► [writer]
                (always)
```

2. Conditional Transitions (addConditionalEdges)

The choice of the next node depends on the state.

```typescript
workflow.addConditionalEdges(
  'reviewer', // Source node
  shouldContinue, // Function that decides where to go
  {
    writer: 'writer', // If the function returns 'writer' → go to writer
    finalizer: 'finalizer', // If the function returns 'finalizer' → go to finalizer
  }
);
```

Visualization:

```text
                              ┌──────────► [writer]
                              │
[reviewer] ─────► [?] ────────┤
                              │
                              └──────────► [finalizer]

The function shouldContinue decides which arrow to take.
```

Detailed Function shouldContinue

This is the "brain" of our conditional edge. It examines the current state and decides where to go next. The function must return a string that matches one of the keys in the mapping object we defined in addConditionalEdges.

```typescript
function shouldContinue(state: ResearchStateType): 'writer' | 'finalizer' {
  // Returns one of these strings; each must be a key in the
  // addConditionalEdges mapping

  const review = state.review.toLowerCase();
  const maxIterations = 3;

  // ═══════════════════════════════════════════════════════════════
  // CONDITION 1: Reviewer said "APPROVED"
  // ('зацверджана' is Belarusian for "approved"; it covers reviews
  // written in Belarusian. Note that a plain substring check also
  // matches phrases such as "not approved".)
  // ═══════════════════════════════════════════════════════════════
  if (review.includes('зацверджана') || review.includes('approved')) {
    console.log('Article approved');
    return 'finalizer'; // ← Proceed to finalize
  }

  // ═══════════════════════════════════════════════════════════════
  // CONDITION 2: Too many attempts (protection against infinite loop)
  // ═══════════════════════════════════════════════════════════════
  if (state.iterationCount >= maxIterations) {
    console.log('Maximum iterations reached');
    return 'finalizer'; // ← Force finalize
  }

  // ═══════════════════════════════════════════════════════════════
  // OTHERWISE: Needs revision
  // ═══════════════════════════════════════════════════════════════
  console.log('Revision needed');
  return 'writer'; // ← Go back to writer
}
```

Decision flowchart:

```text
               ┌───────────────────┐
               │  shouldContinue   │
               │    (function)     │
               └─────────┬─────────┘
                         │
                         ▼
          ┌──────────────────────────────┐
          │  Is "approved" present       │
          │  in the review?              │
          └──────────────┬───────────────┘
                         │
            ┌────────────┴────────────┐
            │                         │
           YES                        NO
            │                         │
            ▼                         ▼
      ┌────────────┐    ┌──────────────────────────┐
      │   return   │    │ Is iterationCount >= 3?  │
      │'finalizer' │    └─────────────┬────────────┘
      └────────────┘                  │
                         ┌────────────┴────────────┐
                         │                         │
                        YES                        NO
                         │                         │
                         ▼                         ▼
                   ┌────────────┐            ┌────────────┐
                   │   return   │            │   return   │
                   │'finalizer' │            │  'writer'  │
                   └────────────┘            └────────────┘
```
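A plain includes('approved') check also fires on replies such as "Not approved yet". One possible alternative, sketched below as a standalone function you can unit-test without LangGraph, is to ask the reviewer to start its reply with the verdict and check only the first word. isApproved and route are hypothetical names, the "verdict first" convention is an assumption you would have to state in the reviewer prompt, and the Belarusian keyword is left out for brevity.

```typescript
type Route = 'writer' | 'finalizer';

interface RoutingState {
  review: string;
  iterationCount: number;
}

const MAX_ITERATIONS = 3;

// Approved only when the reply *starts* with the verdict, e.g. "APPROVED. Great work"
function isApproved(review: string): boolean {
  const firstWord = review.trim().split(/\s+/)[0] ?? '';
  // Drop punctuation such as "APPROVED." or "APPROVED:" before comparing
  return firstWord.toLowerCase().replace(/[^a-z]/g, '') === 'approved';
}

function route(state: RoutingState): Route {
  if (isApproved(state.review)) return 'finalizer'; // condition 1: approved
  if (state.iterationCount >= MAX_ITERATIONS) return 'finalizer'; // condition 2: stop looping
  return 'writer'; // otherwise: another revision
}

console.log(route({ review: 'APPROVED. The article is excellent!', iterationCount: 1 })); // finalizer
console.log(route({ review: 'Not approved yet: add examples', iterationCount: 1 })); // writer
console.log(route({ review: 'Improve: 1. Add examples', iterationCount: 3 })); // finalizer
```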

How the graph is built

Now let's put everything together! Building a graph in LangGraph follows a simple pattern: create the graph, add nodes, connect them with edges, and compile.

Sequence of creation:

```typescript
import { StateGraph } from '@langchain/langgraph';

// ═══════════════════════════════════════════════════════════════
// STEP 1: Create a StateGraph with our state
// ═══════════════════════════════════════════════════════════════
const workflow = new StateGraph(ResearchState)

  // ═══════════════════════════════════════════════════════════
  // STEP 2: Add nodes (register functions)
  // ═══════════════════════════════════════════════════════════
  .addNode('researcher', researcherNode) // Name 'researcher' → function
  .addNode('writer', writerNode) // Name 'writer' → function
  .addNode('reviewer', reviewerNode) // Name 'reviewer' → function
  .addNode('finalizer', finalizerNode) // Name 'finalizer' → function

  // ═══════════════════════════════════════════════════════════
  // STEP 3: Add simple transitions
  // ═══════════════════════════════════════════════════════════
  .addEdge('__start__', 'researcher') // Start → Researcher
  .addEdge('researcher', 'writer') // Researcher → Writer
  .addEdge('writer', 'reviewer') // Writer → Reviewer

  // ═══════════════════════════════════════════════════════════
  // STEP 4: Add the conditional transition
  // ═══════════════════════════════════════════════════════════
  .addConditionalEdges('reviewer', shouldContinue, {
    writer: 'writer', // If 'writer' → back to writer
    finalizer: 'finalizer', // If 'finalizer' → to finalizer
  })

  // ═══════════════════════════════════════════════════════════
  // STEP 5: Add the final transition
  // ═══════════════════════════════════════════════════════════
  .addEdge('finalizer', '__end__'); // Finalizer → End
```

Special nodes:

```text
┌───────────────┬─────────────────────────────────────────────┐
│ '__start__'   │ Virtual start node                          │
│               │ LangGraph automatically starts from it      │
├───────────────┼─────────────────────────────────────────────┤
│ '__end__'     │ Virtual end node                            │
│               │ When reached, the graph completes           │
└───────────────┴─────────────────────────────────────────────┘
```

PART 5: Compilation and execution

We've defined our state, nodes, and edges. Now it's time to turn this blueprint into a running application!

What is compile()?

compile() is the process of transforming a graph description into an executable program. Until you call compile(), you just have a description of what you want to build - not an actual runnable system.

```typescript
// Graph description (blueprint)
const workflow = new StateGraph(ResearchState)
  .addNode(...)
  .addEdge(...);

// Compilation into an executable program
const app = workflow.compile();
//     ▲
//     └── Now this can be run!
```

Analogy: Recipe vs. Finished Dish

```text
workflow (description)          app (compiled program)
──────────────────────          ──────────────────────
Recipe on paper                 Ready pizza
  - How to make the dough       (can be eaten)
  - What to add
  - How to bake

Cannot be eaten!                Can be eaten!
```

Checkpointer (MemorySaver)

What is it?

Checkpointer is a mechanism for saving state after each step.

```typescript
import { MemorySaver } from '@langchain/langgraph';

const checkpointer = new MemorySaver();

const app = workflow.compile({
  checkpointer, // ← Adding the checkpointer
});
```

Why is this needed?

```text
WITHOUT CHECKPOINTER:
═════════════════════════════════════════════════════════════════

  Run 1: START → researcher → writer → ... → END

  Run 2: START → researcher → ... (everything from the beginning!)

  ✗ Cannot continue from the stopping point
  ✗ Cannot view history


WITH CHECKPOINTER:
═════════════════════════════════════════════════════════════════

  Run 1: START → researcher → [SAVED]
                                 │
                       thread_id: "chat-123"

  Run 2: [RESUMING] → writer → reviewer → ...
             │
   thread_id: "chat-123"

  ✓ Can continue from the stopping point
  ✓ Can view history
  ✓ Can have multiple independent "conversations"
```

Thread ID - identifier of the "thread"

```typescript
const config = {
  configurable: {
    thread_id: 'article-1', // Unique ID for this "conversation"
  },
};

// Each thread_id is a separate story:
// 'article-1' - about one article
// 'article-2' - about another article
// They do not overlap!
```
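To make "separate threads" concrete, here is a toy in-memory checkpoint store keyed by thread ID. It illustrates the concept only: ToyCheckpointStore, its methods and the two-field DemoState are hypothetical and do not reflect MemorySaver's actual interface.

```typescript
interface DemoState {
  topic: string;
  draft: string;
}

interface Snapshot {
  step: number; // position in this thread's history
  node: string; // node that just ran
  state: DemoState; // copy of the state after that node
}

class ToyCheckpointStore {
  private threads = new Map<string, Snapshot[]>();

  // Record a snapshot after a node has run on the given thread
  save(threadId: string, node: string, state: DemoState): void {
    const snapshots = this.threads.get(threadId) ?? [];
    snapshots.push({ step: snapshots.length, node, state: { ...state } });
    this.threads.set(threadId, snapshots);
  }

  // The most recent snapshot is where a resumed run would pick up
  latest(threadId: string): Snapshot | undefined {
    const snapshots = this.threads.get(threadId);
    return snapshots ? snapshots[snapshots.length - 1] : undefined;
  }

  // Full history of one thread; other threads are never mixed in
  history(threadId: string): readonly Snapshot[] {
    return this.threads.get(threadId) ?? [];
  }
}

const store = new ToyCheckpointStore();
store.save('article-1', 'researcher', { topic: 'Benefits of LangChain', draft: '' });
store.save('article-1', 'writer', { topic: 'Benefits of LangChain', draft: 'Draft 1' });
store.save('article-2', 'researcher', { topic: 'Another article', draft: '' });

console.log(store.latest('article-1')?.node); // writer
console.log(store.history('article-1').length); // 2
console.log(store.history('article-2').length); // 1
```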

Running the Graph

Finally! Let's run our graph. The invoke() function takes initial state values and configuration, then executes the entire graph from start to finish.

The invoke() Function

```typescript
import { HumanMessage } from '@langchain/core/messages';

const result = await app.invoke(
  // Initial data for the state
  {
    topic: 'Benefits of LangChain',
    messages: [new HumanMessage('Benefits of LangChain')],
  },
  // Configuration
  {
    configurable: {
      thread_id: 'article-1',
    },
  }
);
```

What Happens When invoke() is Called:

```text
┌─────────────────────────────────────────────────────────────────┐
│                             invoke()                            │
└────────────────────────────────┬────────────────────────────────┘
                                 │
                                 ▼
┌─────────────────────────────────────────────────────────────────┐
│  1. INITIALIZE STATE                                            │
│     state = {                                                   │
│       topic: "Benefits of LangChain",                           │
│       messages: [HumanMessage],                                 │
│       research: "",            ← default                        │
│       draft: "",               ← default                        │
│       review: "",              ← default                        │
│       finalArticle: "",        ← default                        │
│       iterationCount: 0,       ← default                        │
│     }                                                           │
└────────────────────────────────┬────────────────────────────────┘
                                 │
                                 ▼
┌─────────────────────────────────────────────────────────────────┐
│  2. START: __start__ → researcher                               │
│     Execute researcherNode(state)                               │
│     → Receive update {research: "...", messages: [...]}         │
│     → Merge with state via reducers                             │
│     Save checkpoint                                             │
└────────────────────────────────┬────────────────────────────────┘
                                 │
                                 ▼
┌─────────────────────────────────────────────────────────────────┐
│  3. TRANSITION: researcher → writer                             │
│     Execute writerNode(state)                                   │
│     → Receive update {draft: "...", iterationCount: 1}          │
│     → Merge with state                                          │
│     Save checkpoint                                             │
└────────────────────────────────┬────────────────────────────────┘
                                 │
                                 ▼
┌─────────────────────────────────────────────────────────────────┐
│  4. TRANSITION: writer → reviewer                               │
│     Execute reviewerNode(state)                                 │
│     → Receive update {review: "..."}                            │
│     → Merge with state                                          │
│     Save checkpoint                                             │
└────────────────────────────────┬────────────────────────────────┘
                                 │
                                 ▼
┌─────────────────────────────────────────────────────────────────┐
│  5. CONDITIONAL TRANSITION: shouldContinue(state)               │
│     → Returns 'writer' or 'finalizer'                           │
│     → If 'writer': go back to step 3                            │
│     → If 'finalizer': continue                                  │
└────────────────────────────────┬────────────────────────────────┘
                                 │ (if 'finalizer')
                                 ▼
┌─────────────────────────────────────────────────────────────────┐
│  6. TRANSITION: reviewer → finalizer                            │
│     Execute finalizerNode(state)                                │
│     → Receive update {finalArticle: "..."}                      │
│     → Merge with state                                          │
│     Save checkpoint                                             │
└────────────────────────────────┬────────────────────────────────┘
                                 │
                                 ▼
┌─────────────────────────────────────────────────────────────────┐
│  7. END: finalizer → __end__                                    │
│     The graph has completed                                     │
│     Return the final state                                      │
└────────────────────────────────┬────────────────────────────────┘
                                 │
                                 ▼
                        return state (full)
```
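The loop in this diagram is small enough to model directly. The dependency-free sketch below mimics its steps (run a node, merge its partial update, follow a plain or conditional edge, stop at `__end__`) with stubbed agents instead of LLM calls. runGraph, the Edge type and the stub nodes are hypothetical illustrations; LangGraph's real runtime (channels, supersteps, checkpoints) is more sophisticated, and the maxSteps guard only loosely mirrors its recursion limit.

```typescript
interface State {
  research: string;
  draft: string;
  review: string;
  finalArticle: string;
  iterationCount: number;
  messages: string[];
}

type NodeFn = (state: State) => Partial<State>;
// An edge is either a fixed target or a router that picks one from the state
type Edge = string | ((state: State) => string);

// messages are appended; every other field is replaced
function merge(state: State, update: Partial<State>): State {
  const { messages = [], ...rest } = update;
  return { ...state, ...rest, messages: [...state.messages, ...messages] };
}

function resolveNext(edge: Edge | undefined, state: State): string {
  if (edge === undefined) throw new Error('Node has no outgoing edge');
  return typeof edge === 'string' ? edge : edge(state);
}

// Run from __start__ until __end__, merging each node's partial update
function runGraph(
  nodes: Record<string, NodeFn>,
  edges: Record<string, Edge>,
  initial: State,
  maxSteps = 25
): State {
  let state = initial;
  let current = resolveNext(edges['__start__'], state);
  let steps = 0;
  while (current !== '__end__') {
    if (++steps > maxSteps) throw new Error(`Step limit of ${maxSteps} reached`);
    const node: NodeFn | undefined = nodes[current];
    if (!node) throw new Error(`Unknown node: ${current}`);
    state = merge(state, node(state)); // run the node, merge its update
    current = resolveNext(edges[current], state); // plain or conditional edge
  }
  return state;
}

// Stub agents (no LLM calls); the stub reviewer approves the second draft
const nodes: Record<string, NodeFn> = {
  researcher: () => ({ research: 'Notes', messages: ['[Research completed]'] }),
  writer: (s) => ({
    draft: `Version ${s.iterationCount + 1}`,
    iterationCount: s.iterationCount + 1,
    messages: [`[Draft ${s.iterationCount + 1}]`],
  }),
  reviewer: (s) => ({
    review: s.iterationCount >= 2 ? 'APPROVED' : 'Improve: add examples',
    messages: ['[Review]'],
  }),
  finalizer: (s) => ({ finalArticle: s.draft, messages: ['[READY]'] }),
};

const edges: Record<string, Edge> = {
  __start__: 'researcher',
  researcher: 'writer',
  writer: 'reviewer',
  // Conditional edge with the same rules as shouldContinue
  reviewer: (s) =>
    s.review.toLowerCase().includes('approved') || s.iterationCount >= 3
      ? 'finalizer'
      : 'writer',
  finalizer: '__end__',
};

const emptyState: State = {
  research: '',
  draft: '',
  review: '',
  finalArticle: '',
  iterationCount: 0,
  messages: [],
};

const result = runGraph(nodes, edges, emptyState);

console.log(result.finalArticle); // Version 2
console.log(result.iterationCount); // 2
console.log(result.messages.length); // 6
```

Keeping the router a plain function of the state is what makes the cycle safe to reason about: the same inputs always pick the same edge, and the counter guarantees termination even if the reviewer never approves.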

PART 6: FAQ - Frequently Asked Questions

Why does the reducer add messages but replace the topic?

Messages represent history. We want to keep all messages throughout the process.

Topic is the current theme. When the topic changes, the old one is no longer needed.

What happens if I don’t specify a reducer?

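LangGraph falls back to replacement (like for the topic): the field keeps the last value written. One caveat: such a field accepts only one update per step, so if two nodes running in parallel write to it in the same step, LangGraph raises an error.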

Why is iterationCount needed?

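To protect against infinite loops. If the reviewer never says "approved", the writer and the reviewer would keep cycling until LangGraph's recursion limit (25 steps by default) stops the run with an error. The counter lets the graph finish gracefully instead.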

Can one node directly invoke another?

No. Nodes do not know about each other. They only read/write state. LangGraph determines who executes next based on transitions.

What are `__start__` and `__end__`?

These are special virtual nodes:

- `__start__` - where the graph begins

- `__end__` - where the graph ends

They do not execute code - they only mark the boundaries.

Can there be multiple end nodes?

Yes, several nodes can lead to the same `__end__`. For example:

```typescript
.addEdge('success', '__end__')
.addEdge('error', '__end__')
```

Conclusion

Congratulations! You've learned the core concepts of LangGraph. Let's recap what we covered:

| Concept | What it does |
|---|---|
| State + Annotation | Defines the data structure passed between agents |
| Reducer | Specifies how to combine new data with existing data |
| Nodes (Agents) | Functions that process the state |
| Edges (Transitions) | Rules determining the order of operations |
| Conditional Edges | Dynamic selection of the next node |
| Checkpointer | Saves the state for continuation later |

What's Next?

Now that you understand the basics, you can:

- Build your own agent system - Start with a simple two-node graph and gradually add complexity

- Experiment with different flows - Try creating graphs with multiple conditional branches

- Add persistence - Use MemorySaver during development and a database-backed checkpointer for production

- Explore advanced features - Look into subgraphs, parallel execution, and human-in-the-loop patterns

Key Takeaways

- Think in graphs: Break down your AI workflow into discrete steps (nodes) connected by rules (edges)

- State is everything: All communication between nodes happens through the shared state

- Reducers matter: Choose the right reducer for each field - append for history, replace for current values

- Cycles enable iteration: Unlike simple chains, graphs can loop back for refinement

The article-writing example we built demonstrates a real-world pattern: research → write → review → (repeat if needed) → finalize. This same pattern applies to many AI applications: code review, content moderation, multi-step reasoning, and more.

Happy building! 🚀

Greetings!

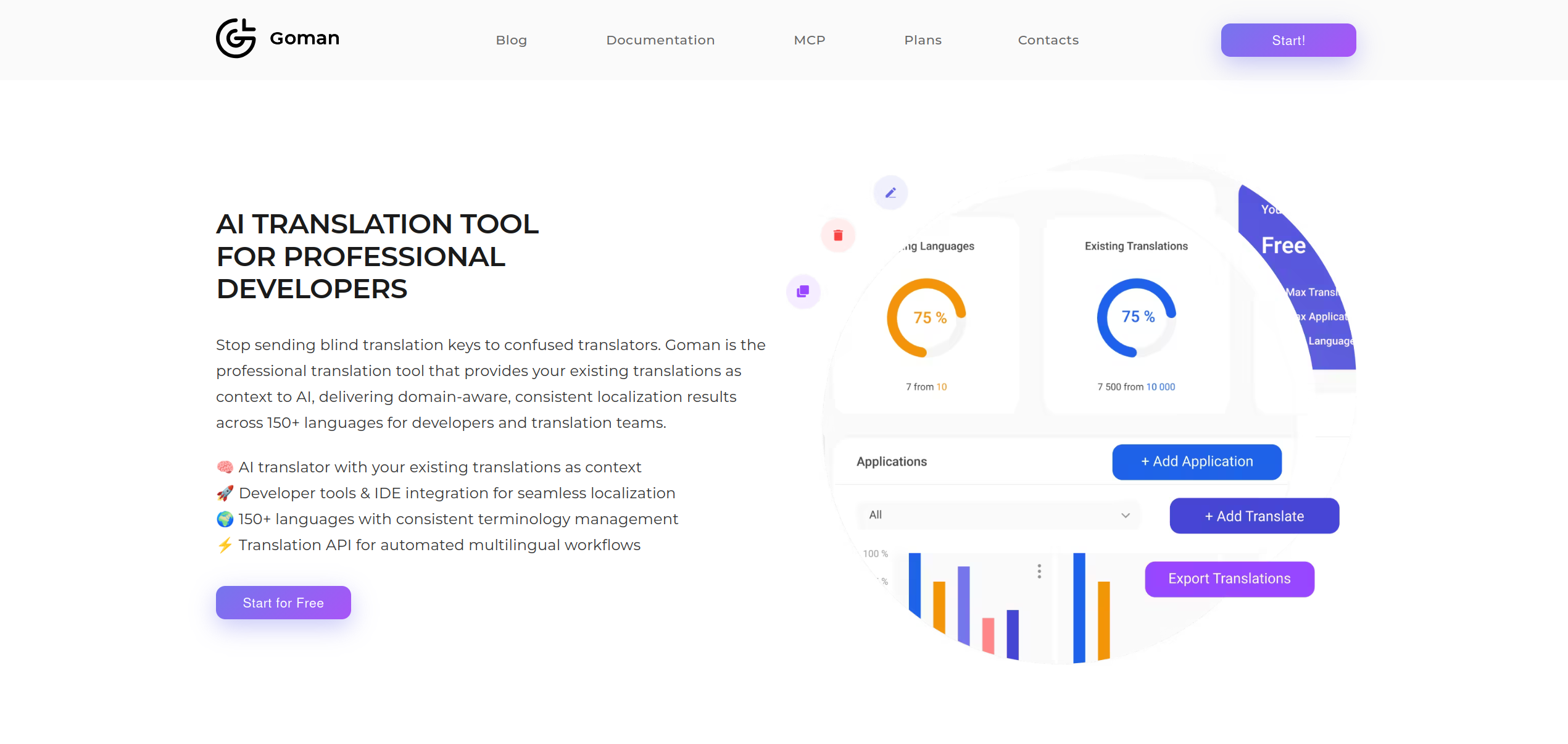

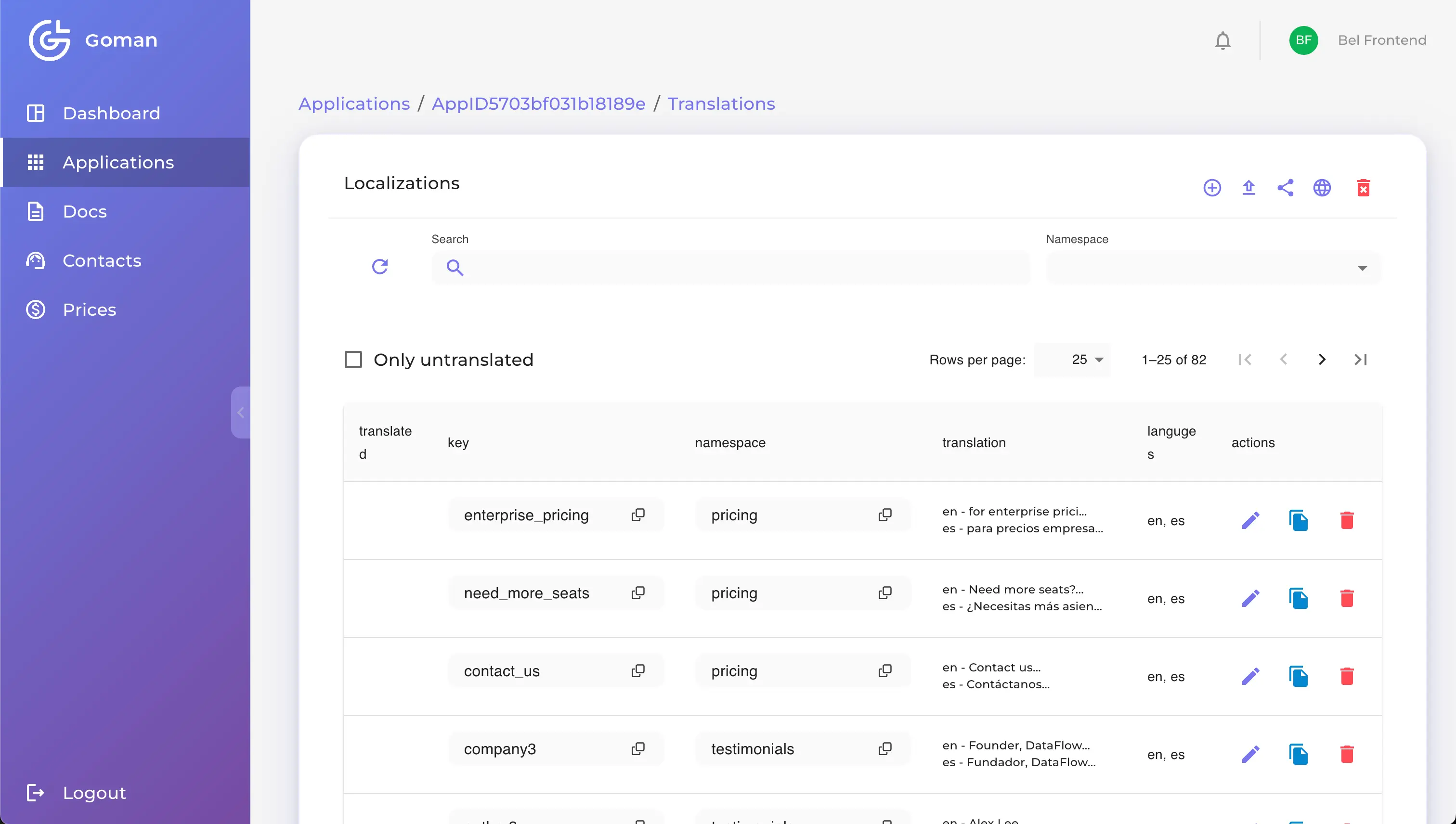

If you are tired of manually editing endless JSON files with translations and want your AI agent (Copilot, Cursor, Claude) to do it for you — this article is for you.

Today we will look at Goman.live — a tool that turns the routine of localization into magic. We will discuss why it is needed, how it saves your time, and why it is a must-have for the modern "vibe coder".

What is Goman.live and why do you need it?

Goman.live is not just another translator. It is a smart backend for managing your application's content.

Imagine: you write code, and all texts — buttons, errors, descriptions — automatically appear in all required languages, taking into account the context and style of your project. No manual copying to Google Translate, no errors in keys, and no "broken" localization files.

The service works in two modes:

- Autopilot (via MCP): You simply ask your AI agent in the IDE to "add a button", and everything happens by itself.

- Control Center (Web Editor): A powerful interface for manual tuning, quality checking, and managing complex contexts.

Who really needs this?

Goman.live was created to remove pain from the development process:

- 🚀 Vibe Coders (AI-Native Developers): You are used to working fast. Context switching to edit JSON kills the flow. Goman allows you not to leave the IDE.

- 👨‍💻 Pet Project and Startup Developers: Want to launch in 10 languages right away? With Goman, it takes as much time as launching in one.

- 👩‍💼 Teams: A single source of truth for all texts. The developer doesn't wait for the translator, and the translator doesn't break the JSON.

MCP: Automation of localization in IDE

This is the main feature. MCP (Model Context Protocol) allows you to connect Goman directly to your Copilot or Cursor.

How does it look in practice?

Instead of opening 5 files (en.json, de.json, es.json...), searching for the right key, and inserting text, you write in the IDE chat:

"Scan the open file and create translations for all text strings."

Or even for a whole module:

"Localize all components in the `src/features/auth` folder. Save keys in the `auth` namespace."

You can ask to localize the entire project at once, but for better control, it is recommended to do it in parts.

What happens under the hood:

- The agent checks if such a key exists (to avoid duplicates).

- Generates translations into all languages supported by the project.

- Saves them to the database.

- You can immediately use `t('actions.save')` in the code.
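The article does not describe Goman.live's storage format or client library. The sketch below is therefore a generic illustration of how a call like `t('actions.save')` usually resolves a namespaced key against per-locale dictionaries. The data layout, the `createT` helper, and the default `common` namespace are all hypothetical.

```typescript
// Hypothetical data layout: locale -> namespace -> key -> text.
type Dictionary = Record<string, Record<string, string>>;

const dictionaries: Record<string, Dictionary> = {
  en: { actions: { save: "Save", cancel: "Cancel" } },
  de: { actions: { save: "Speichern" } },
};

// Builds a t() function bound to one locale, falling back to a default locale.
function createT(locale: string, fallbackLocale = "en") {
  return function t(path: string): string {
    // "actions.save" -> namespace "actions", key "save"; no dot -> assumed "common" namespace
    const dot = path.indexOf(".");
    const namespace = dot === -1 ? "common" : path.slice(0, dot);
    const key = dot === -1 ? path : path.slice(dot + 1);
    const lookup = (loc: string): string | undefined => dictionaries[loc]?.[namespace]?.[key];
    // Missing everywhere -> return the key itself so the gap is visible in the UI.
    return lookup(locale) ?? lookup(fallbackLocale) ?? path;
  };
}

const t = createT("de");
console.log(t("actions.save")); // Speichern
console.log(t("actions.cancel")); // Cancel (falls back to en)
console.log(t("actions.delete")); // actions.delete (missing in every locale)
```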

It is important to note: besides the translations themselves, the system stores context for each key (this works both via MCP and via the Editor). Thus, the agent and you can always know where a specific localization is used and what it means.

This saves hours of routine work and allows you to focus on application logic, not dictionaries.

Full Set of Tools (MCP Tools)

The MCP server gives your agent full control over the localization process. The service covers the entire lifecycle of working with texts:

1. Project Discovery

- `get_active_languages`: The first thing the agent does is check which languages are active in the project. This ensures that translations are created only for the required locales.

- `get_namespaces`: Shows the project structure (list of files/namespaces) so the agent knows exactly where to save new texts (e.g., in `common` or `auth`).

2. Search & Verification

- `search_localizations`: Powerful search by keys and values. The agent can find all buttons with the word "Cancel" or check how "Save" is translated elsewhere to keep the wording consistent.

- `get_localization_exists`: Precise check of a specific key. It helps avoid duplicating or overwriting existing important texts.

3. Content Management

- `create_localization`: The main tool. Creates or updates translations. It understands context and can add translations to all active project languages in a single request.

- `delete_localization`: Deletes obsolete or erroneous keys, keeping the project clean.

This set of tools turns your AI agent into a full-fledged localization manager.
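The discover → verify → create order above can be modeled with a small in-memory store. This is a sketch under explicit assumptions. The method names only echo the MCP tools, and the signatures, the `Entry` shape, and the "refuse to overwrite" rule are invented for illustration. They are not Goman.live's actual tool schemas.

```typescript
// In-memory stand-in for a localization backend. Method names echo the MCP tools
// above, but these signatures are invented for illustration only.
type Translations = Record<string, string>; // locale -> text
type Entry = { context: string; translations: Translations };

class LocalizationStore {
  private readonly entries = new Map<string, Entry>();
  private readonly activeLanguages: string[];

  constructor(activeLanguages: string[]) {
    this.activeLanguages = activeLanguages;
  }

  // Discovery: which locales must every key cover?
  getActiveLanguages(): string[] {
    return [...this.activeLanguages];
  }

  // Verification: is this key already taken?
  exists(namespace: string, key: string): boolean {
    return this.entries.has(`${namespace}.${key}`);
  }

  // Management: refuse silent overwrites and incomplete language coverage.
  create(namespace: string, key: string, entry: Entry, overwrite = false): void {
    const id = `${namespace}.${key}`;
    if (this.entries.has(id) && !overwrite) {
      throw new Error(`Key ${id} already exists`);
    }
    const missing = this.activeLanguages.filter((lang) => !(lang in entry.translations));
    if (missing.length > 0) {
      throw new Error(`Missing translations for: ${missing.join(", ")}`);
    }
    this.entries.set(id, entry);
  }
}

// The order an agent would follow: discover languages, check the key, then create it.
const store = new LocalizationStore(["en", "de"]);
console.log(store.getActiveLanguages());
if (!store.exists("actions", "save")) {
  store.create("actions", "save", {
    context: "Button that submits the settings form",
    translations: { en: "Save", de: "Speichern" },
  });
}
console.log(store.exists("actions", "save")); // true
```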

Ready-made Templates (Prompts) for a Quick Start

To avoid wasting time writing instructions for AI, the system already has a set of proven prompts for typical tasks. They are available exclusively via MCP and are ready for use by your agent:

- `init-project`: Full localization setup from scratch. Helps choose the project structure, scans files, and creates the first keys.

- `localize-component`: Paste in the component code (React, Vue, etc.), and the agent will find all texts, create keys, and even show how to change the code.

- `localize-folder`: The same, but for entire folders. Ideal for mass localization.

- `add-language`: Want to add Polish? This prompt takes all existing English keys and translates them into the new language.

- `check-coverage`: Project audit. Shows which keys are missing in which languages (e.g., "5 translations missing in `ru`").

- `localization-audit`: Deep analysis. Finds keys that are no longer used in the code and suggests deleting them.

Just choose the necessary template, and the system itself will substitute the optimal settings for a high-quality result.
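A coverage audit like `check-coverage` comes down to a set difference per locale. This minimal sketch assumes a hypothetical input of flat key lists per locale and treats `en` as the reference locale. It is not the prompt's actual implementation.

```typescript
// locale -> flat list of keys present for that locale (hypothetical input shape)
type KeysByLocale = Record<string, string[]>;

// For every non-reference locale, list the reference keys it lacks.
function findMissing(keysByLocale: KeysByLocale, sourceLocale = "en"): Record<string, string[]> {
  const reference = Array.from(new Set(keysByLocale[sourceLocale] ?? []));
  const report: Record<string, string[]> = {};
  for (const [locale, keys] of Object.entries(keysByLocale)) {
    if (locale === sourceLocale) continue;
    const present = new Set(keys);
    report[locale] = reference.filter((key) => !present.has(key)).sort();
  }
  return report;
}

const report = findMissing({
  en: ["actions.save", "actions.cancel", "auth.login"],
  de: ["actions.save", "actions.cancel", "auth.login"],
  ru: ["actions.save"],
});
for (const [locale, missing] of Object.entries(report)) {
  console.log(`${missing.length} translations missing in ${locale}`);
}
// 0 translations missing in de
// 2 translations missing in ru
```

Swapping the arguments of the difference (keys in a locale but not in the reference) would instead surface stale keys, which is closer to what `localization-audit` is described as doing.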

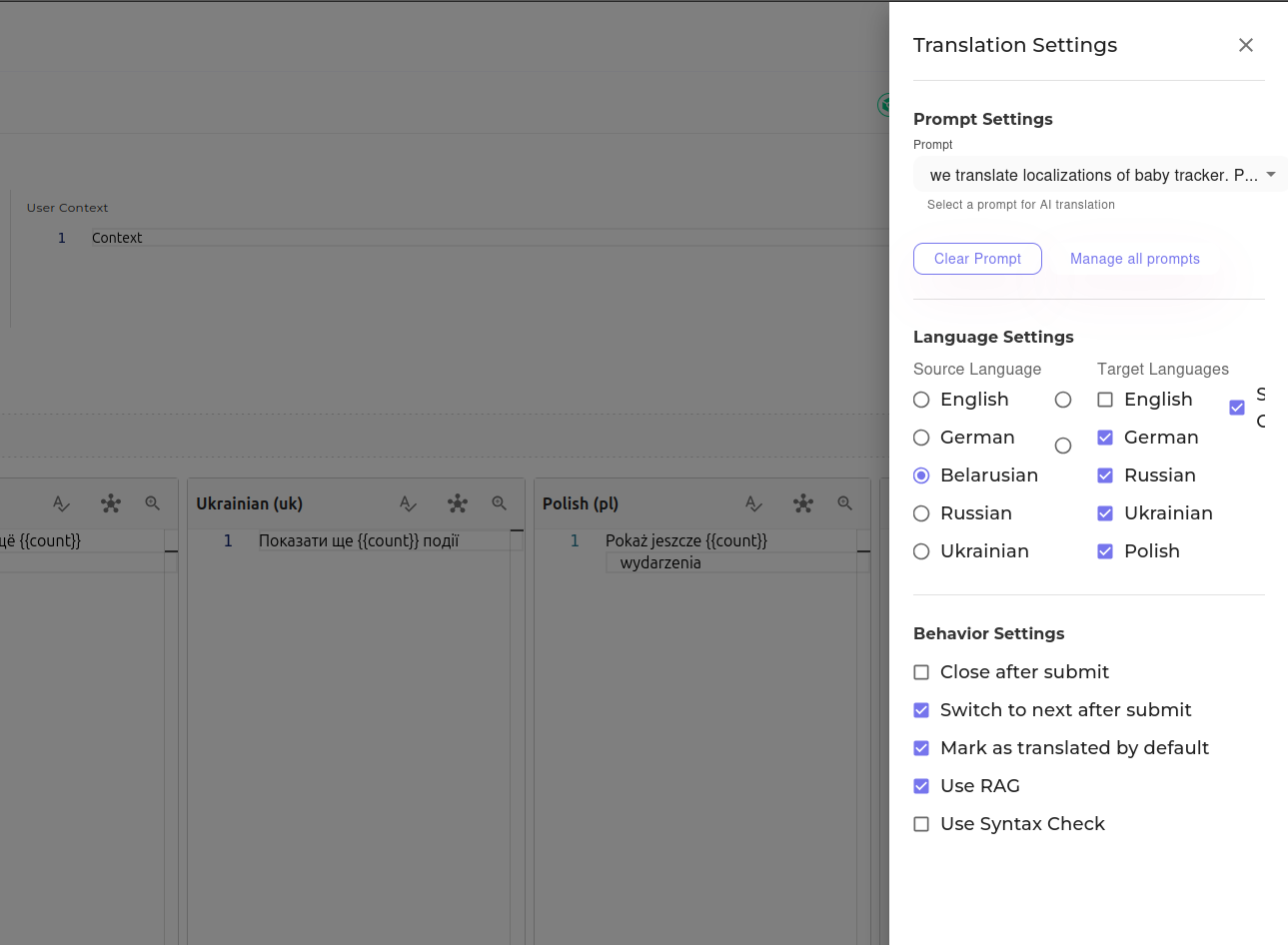

Context and Prompt Management in IDE

This feature links settings in the web interface with the developer's working environment. The system provides a Prompt Editor for creating specialized instructions for AI agents.

This solves the "blank slate" problem when the model needs the context of the task explained every time.

What the editor allows you to do: The main function is the creation and editing of the prompts themselves. It is through them that you manage the context in your IDE or agent. You write the rules of the game once, and the agent starts following them.

It is important to note: this works not only for translations. You can create prompts for any development tasks — from refactoring to writing tests.

Usage examples:

- For coding: "Use arrow functions, avoid `any`, write comments in JSDoc format, and always cover new code with tests."

- For UI text: You can set a strict rule: "Translate as briefly as possible so the text fits into buttons, use Apple HIG terminology."

- For marketing: "Write emotionally, address the user as 'you', add appropriate emojis."

- For technical documentation: "Maintain an official style, do not translate specific terms (e.g., 'middleware', 'token'), formulate thoughts precisely and dryly."

The user simply selects the necessary prompt from the list in Copilot or Cursor, and the agent instantly switches to the required mode of operation.

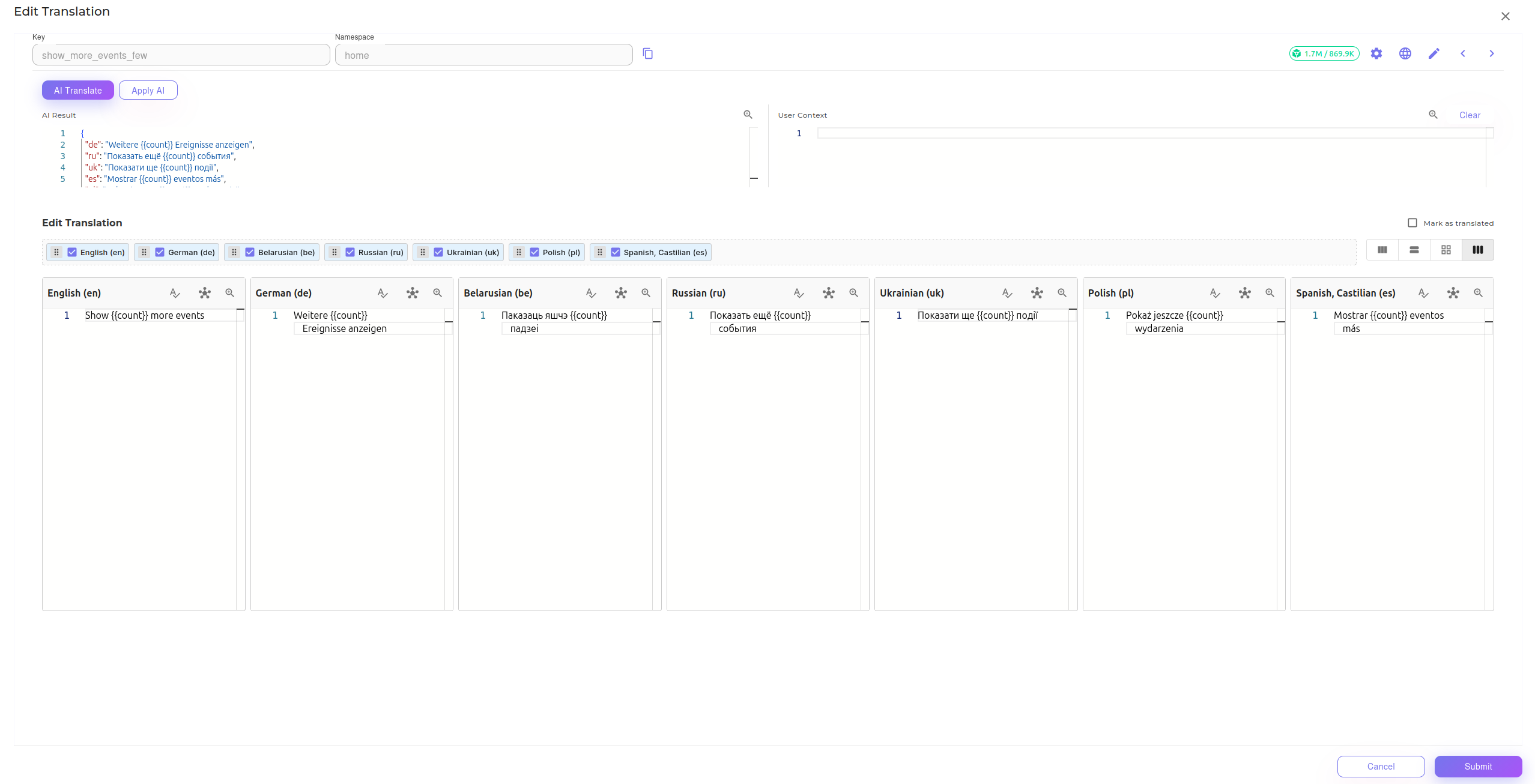

Editor: Full Control Over Quality

If MCP is speed, then the web editor is precision.

Here you can:

- See the full picture: All languages side by side.

- Edit context: Add a description for AI so it understands that "Home" is a page, not a building.

- Fix nuances: If AI slightly missed the style, you can quickly fix it manually or ask to redo the variant.

This is ideal for marketing texts, landing pages, and important messages where every word matters.

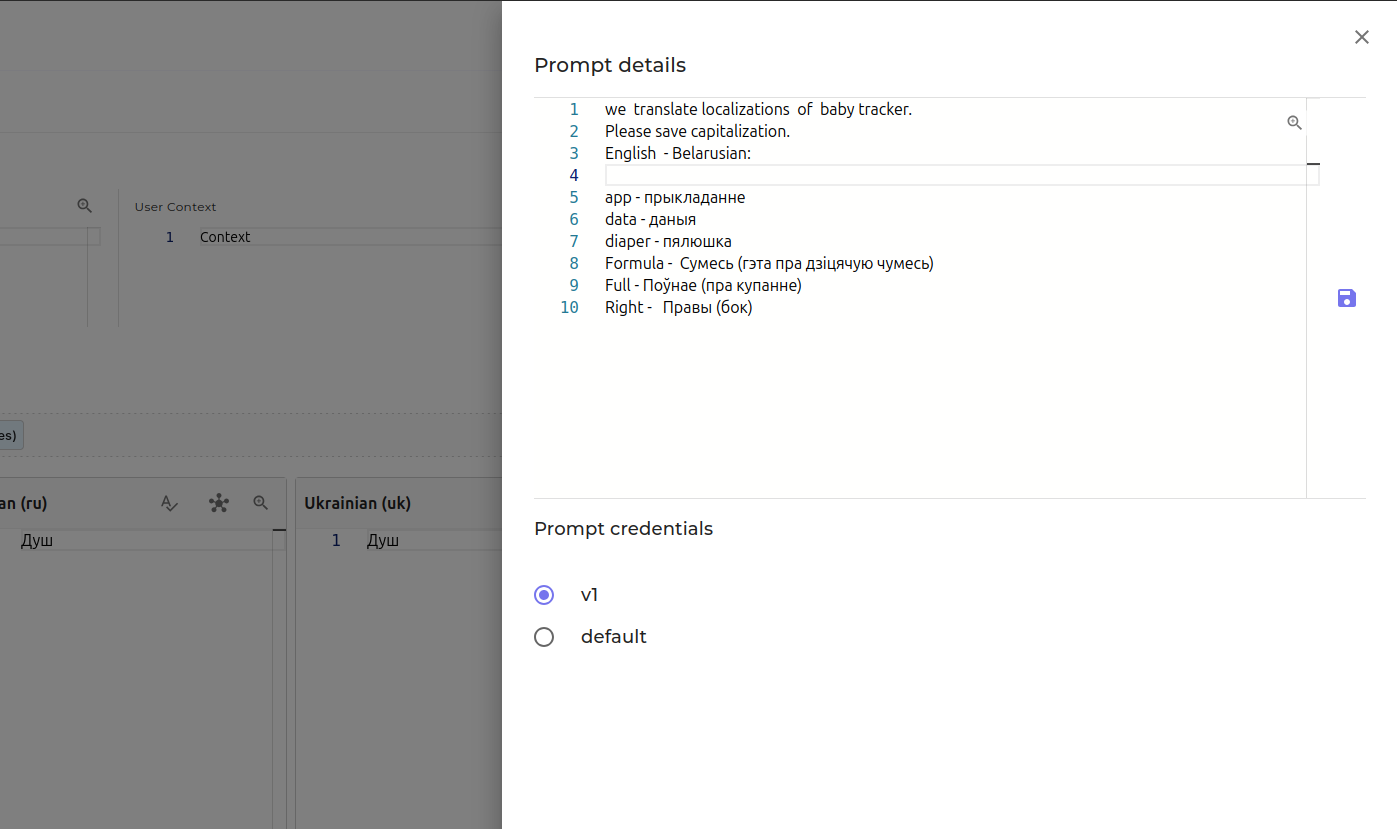

Prompts: Your Personal Style

Why do AI translations sometimes look "machine-like"? Because the model doesn't know your context. In Goman.live, you create Prompts — a set of rules for your project.

This is a replacement for outdated "glossaries". You can tell the model:

- "Address the user as 'you'"

- "Do not translate the brand name 'SuperApp'"

- "Use a cheerful, informal tone"

AI Assistant for Prompts

Don't know how to write a prompt correctly? The built-in AI chat will help formulate ideal instructions for the model so the result is exactly as you expect.

Prompt Storage and Versioning

You can currently store up to four versions of a single prompt and switch between them in one click.

This is very convenient if you want to experiment with wording or translation rules. Used well, prompt versioning can also reduce token consumption. It also helps when testing and searching for the most suitable prompt: the service includes a prompt testing system, so you can run requests right away and see the results.

Collaboration

If you are not working alone - just add a colleague's email.

They will get access to your project, and you can work on localizations and translations together. The only restrictions are those of your pricing plan. Translation tokens are deducted from the owner's account, that is, from the person who shared the project.

Supported Languages

There is no full list of languages in the service - and that's good.

Models are used that can work with dozens and hundreds of languages, so there are effectively no restrictions.

Translation quality depends on the text itself, the prevalence of the language, and whether there are similar translations in your database.

Translations via the editor often turn out better than via standard tools because context and previous results are taken into account.

And if you work via MCP, the quality depends on the model your agent uses - for example, Claude, GPT, etc.

Quality Control: Trust, but Verify

AI is a powerful tool, but not a perfect one. That is why Goman.live has built-in safeguards:

- Automatic Syntax Check: The service makes sure the AI doesn't break variables (e.g., `{{name}}`) or HTML tags.

- Highlighting Suspicious Places: In the editor, you will see warnings if a translation looks uncertain.

You always have the last word. The service proposes — you approve.
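The article does not describe how the syntax check works internally. One simple way to catch broken variables and tags is to compare the protected tokens in the source and the translation, as in the sketch below. It assumes `{{name}}`-style placeholders and only checks tag names, not full HTML validity.

```typescript
// Collects {{variables}} and HTML tag openings/closings, e.g. "{{name}}", "<b", "</b".
function protectedTokens(text: string): string[] {
  const placeholders = (text.match(/\{\{\s*[\w.]+\s*\}\}/g) ?? []).map((p) => p.replace(/\s+/g, ""));
  const tags = text.match(/<\/?[a-zA-Z][\w-]*/g) ?? [];
  return [...placeholders, ...tags];
}

// Counts occurrences so both dropped and duplicated tokens are detected.
function countTokens(tokens: string[]): Map<string, number> {
  const counts = new Map<string, number>();
  tokens.forEach((token) => counts.set(token, (counts.get(token) ?? 0) + 1));
  return counts;
}

// Returns a list of problems; an empty list means the translation kept every token.
function checkTranslation(source: string, translation: string): string[] {
  const expected = countTokens(protectedTokens(source));
  const actual = countTokens(protectedTokens(translation));
  const problems: string[] = [];
  expected.forEach((n, token) => {
    if ((actual.get(token) ?? 0) < n) problems.push(`missing ${token}`);
  });
  actual.forEach((n, token) => {
    if ((expected.get(token) ?? 0) < n) problems.push(`unexpected ${token}`);
  });
  return problems;
}

// No problems: placeholder and tags survived.
console.log(checkTranslation("Hello, {{name}}! <b>Welcome</b>", "Hallo, {{name}}! <b>Willkommen</b>"));
// Reports the translated (broken) placeholder as missing/unexpected.
console.log(checkTranslation("Hello, {{name}}!", "Hallo, {{nombre}}!"));
```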

Limitations and Features

Since the service runs on LLMs, it has its own features.

First of all - this is the context size: the model can process only a certain amount of information at a time (on average about 8–10 thousand tokens).

If the text is too large, it is better to divide it into logical parts - this way the model better understands the structure and context, and translations come out more accurate.

When RAG is used, the context may also be incomplete: the text is stored as separate pieces, and only the pieces found by the search reach the model.

Therefore, it is better to translate in small blocks and avoid overloading the model.

This is not a disadvantage but a feature of how LLMs work: they can only "understand" text that fits within their context window.

In return, this approach gives stable results and keeps translations easy to process.
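"Translate in small blocks" can be automated by splitting text at paragraph boundaries under a token budget. This is a minimal sketch. The 4-characters-per-token estimate is a rough heuristic for English, not a real tokenizer. A paragraph that alone exceeds the budget is kept whole rather than cut mid-sentence.

```typescript
// Rough heuristic (assumption): about 4 characters per token for English text.
const estimateTokens = (text: string): number => Math.ceil(text.length / 4);

// Greedily packs whole paragraphs into chunks that stay under maxTokens.
function chunkByParagraphs(text: string, maxTokens: number): string[] {
  const paragraphs = text
    .split(/\n\s*\n/)
    .map((p) => p.trim())
    .filter((p) => p.length > 0);
  const chunks: string[] = [];
  let current = "";
  for (const paragraph of paragraphs) {
    const candidate = current ? `${current}\n\n${paragraph}` : paragraph;
    if (current && estimateTokens(candidate) > maxTokens) {
      chunks.push(current); // close the current chunk before it overflows
      current = paragraph;
    } else {
      current = candidate;
    }
  }
  if (current) chunks.push(current);
  return chunks;
}

const article = ["Intro paragraph.", "Second paragraph with more detail.", "Closing words."].join("\n\n");
const parts = chunkByParagraphs(article, 10);
console.log(parts.length); // 3
```

In practice the budget would be set well below the 8-10 thousand tokens mentioned above, leaving room for the prompt and the model's output.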

About Tokens and Cost

The pricing principle is transparency.

- Via MCP: You use your own API key (Copilot, Cursor, OpenAI), so Goman.live does not charge any additional fees for tokens. From the service's side, it is effectively free.

- Via Editor: The service's own resources are used, so internal tokens are deducted.

How to get a discount?

A referral program is active: bring a friend and get up to 90% discount. You can contact goman.live.service@gmail.com if you want to learn more.

About Data Retention

All your data is stored on our own servers.

A dump is made every 6 hours, and information is not transferred to third parties except for models (LLMs) that process translations.

After an application is deleted, its data may be kept for some time so that it can be restored on personal request. You can also export localizations at any time in the formats the service offers. Data can be uploaded via MCP, via a form (one record at a time), or in bulk from a file in the required format.

About Cooperation

The project team is open to new ideas and cooperation.

If you have a service or business that can be integrated with Goman.live, or if there is interest in the project — you can contact the developers.

Custom plans, API integrations, and even dedicated solutions for specific needs are possible.

Additional Functionality

Goman.live is not just localizations.

The service allows testing prompts, viewing results, saving them in different versions, and even working in a team. This is described in more detail in the service documentation.

What's Next

Work is underway on the following things:

- improving prompt integration with GitHub Copilot, Cursor, and other IDEs,

- choice of translation model and use of own API keys,

- improving RAG and automatic update of localizations,

- and many more ideas that will make working with translations even more convenient.

Summary: Why is it worth trying?

If you want to create global products but don't want to waste your life on spreadsheets with translations — Goman.live is for you.

- Speed: Localization becomes part of writing code, not a separate stage.

- Quality: AI knows context and style better than Google Translate.

- Convenience: Manage everything right from your favorite IDE.

👉 Try it yourself at goman.live and feel the difference. Your users will say "Thank you" (in their native language 😉).

Vibe Coding Without Pain: A Complete Practical Guide from Real Experience

Vibe coding isn't "magic in a vacuum," but a conscious technique of rapid development with a reliable framework. Over the past months, I've transitioned from Bolt.New to Copilot, Claude, Cursor, and Google AI Studio: over a thousand prompts, dozens of iterations, and many lessons. Below is not a collection of platitudes but a polished set of principles, tools, and templates that truly save time, money, and nerves as the codebase grows.

Introduction: What is Vibe-Coding and How Not to Burn Out

The idea is simple: we move in small but precise steps, committing results to Git, asking AI to make local "diff-only" patches instead of rewriting the world, and maintaining a design system from the very first lines. Instead of "an agent that does everything for you," pair with a wise assistant to whom you clearly formulate the task and boundaries. This way, we avoid "hallucinations," unnecessary restructuring, and do not lose control over tokens.

1) Planning and Mini-Contract: How Not to Get Lost in the First 30 Minutes

Before any coding, create a brief but concrete plan:

- 1–2 sentences about what we are doing: "The user does X and gets Y" (example: "Creates and shares task lists in 30 seconds without registration").

- 3 to 7 main flows/screens, without excessive detail.

- Skeleton in terms of files: "routes -> modules -> reusable components."

- A "do not reinvent the wheel" list: buttons, inputs, alerts, helpers, and validation schemas that must be reused rather than rewritten.

Then, a feature mini-contract: inputs, outputs, errors, constraints, and completion criteria. After that, every prompt stays within the scope of this contract.

2) Design System and Consistency: The Framework for the Project

The most costly mistake is starting "on a vibe" without a grid and basic typography. To avoid turning refactoring into manual alignment of margins:

- One base file: breakpoints, spacing scale, grid, typography.

- Main components right away: Button, Input, Select, Alert, Loader, Empty/Error states.

- Check after each iteration: ensure sizes and fonts haven't "spread out."

- In prompts: "Use existing ButtonX, InputY." New styles should not be introduced unnecessarily.

Resource for starting and inspiration: 21st.dev - ready-made UI patterns with prompts.
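The "one base file" idea can be as small as a typed tokens module. This is a sketch only. The values are placeholders, the breakpoints happen to match Tailwind's defaults, and the helper names are hypothetical. In a real project you would export these from a single module, or put them in the Tailwind theme config if you use Tailwind.

```typescript
// Single source of design tokens (placeholder values); export from one module in a real project.
const breakpoints = { sm: 640, md: 768, lg: 1024, xl: 1280 } as const;

const spacingUnit = 4; // px; every margin and padding is a multiple of this unit
const space = (steps: number): string => `${steps * spacingUnit}px`;

const typography = {
  body: { fontSize: "16px", lineHeight: 1.5 },
  h1: { fontSize: "32px", lineHeight: 1.2 },
} as const;

const grid = { columns: 12, gutter: space(4), maxWidth: "1200px" } as const;

// Media query helper keyed by breakpoint name, so raw pixel values never leak into components.
const mediaUp = (bp: keyof typeof breakpoints): string => `@media (min-width: ${breakpoints[bp]}px)`;

console.log(space(2), grid.gutter, mediaUp("md")); // 8px 16px @media (min-width: 768px)
console.log(typography.h1.fontSize); // 32px
```

Because components read spacing only through `space()`, a quick review can tell whether sizes have "spread out" after an AI patch: any raw pixel value in a diff stands out.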

3) A Stack that Helps, Not Hinders

The broader the community and documentation, the more accurately AI hits the API and patterns. Practical combinations:

- Next.js - frontend + lightweight API layer.

- Tailwind CSS - fast, consistent styles without "CSS-mess" at the start.

- Fastify + MongoDB or Supabase - minimal rituals, many ready-made recipes.

What matters is not an exotic stack but reliable answers from the model.

4) Git Discipline: "Freeze Time" More Often

Your best insurance policy:

- One feature per branch.

- Small work chunks - frequent commits with clear messages.

- Before risky generations/reorganizations - commit or branch.

This way, you won't have to "roll back" 700 lines if the AI "optimized" in the wrong place.

5) Breaking Down the Complex: Spec -> Skeleton -> Flesh -> Harden

Not "do the whole module," but a sequential assembly line:

1. Routes and file skeletons.

2. Basic markup and components.

3. Logic, validation, states.

4. Tests, security, optimization.

AI works more stably when the task boundaries are narrow and clear.

6) Prompts without "magic" and with protective boundaries

The final formulation is your control lever. The minimal template:

"Refactor ProfileForm.tsx: move validation to a separate hook, do not touch the API, preserve prop names and public API, use InputX and ButtonY." The protective phrase "Do not change anything that was not requested" is mandatory.

7) Context and "Reset": When to Open a New Chat

A large chat = drift of patterns, loss of context. Restarting is not a failure, but a form of hygiene:

- Brief introduction for a new window: "Feature X, files A/B/C, only allowed to touch …"

- Remember: too much context is as harmful as too little - leave only the essential.

8) Token Economy: Be cautious with the budget

- Small patches instead of giant "rewrites."

- Diff-first: "Show the patch plan + diff, then apply."

- Model switching: simple tasks go to Auto or a smaller model; reviews and security go to Gemini 2.5 Pro / Sonnet.

9) Engineering Hygiene: Tests, Logs, Types

- Small, clean functions without "lazy" side effects.

- Use ESLint with autofixes; remove dead code at every step.

- Minimum unit tests: "happy path" and edge cases (null/empty/unknown type).

- TypeScript with strict rules - fewer comments, more guarantees.

Before committing: remove unnecessary logs and "temporary" comments - they consume attention during iterations and use up tokens.
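"Happy path plus edge cases" can be this small. The sketch uses Node's built-in `node:assert/strict` so it stays dependency-free. With Vitest, the same checks would sit inside `test()`/`expect()` calls. `parseTags` is a hypothetical function chosen only to have something to test.

```typescript
import assert from "node:assert/strict";

// Hypothetical function under test: turns user input into a clean list of tags.
function parseTags(input: unknown): string[] {
  if (typeof input !== "string") return []; // null, undefined, numbers, objects
  return input
    .split(",")
    .map((tag) => tag.trim().toLowerCase())
    .filter((tag) => tag.length > 0);
}

// Happy path
assert.deepEqual(parseTags("React, TypeScript"), ["react", "typescript"]);

// Edge cases: null, empty string, unknown type, separators only
assert.deepEqual(parseTags(null), []);
assert.deepEqual(parseTags(""), []);
assert.deepEqual(parseTags(42), []);
assert.deepEqual(parseTags(" , ,"), []);

console.log("all checks passed");
```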

10) Security: A Brief but Serious Checklist

- Secrets and keys should only be stored on the server (use environment variables, .gitignore, and ensure they are not exposed to the client).

- Validate and sanitize inputs on the server, and ensure output is properly escaped.

- Permissions should not rely solely on login: each action and resource must undergo permission checks.

- Errors: provide a general message for the user and maintain a detailed log for the developer.

- IDOR and resource ownership: ensure the user has the right to access the specific ID.

- Enforce access rules at the database level (RLS and equivalents) where appropriate.

- Rate limiting and encryption (HTTPS in transit, encryption of data at rest).
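Three checklist items fit in one small handler: ownership checks per ID (IDOR), a generic message for the user with a detailed log for the developer, and escaped output. This is a minimal sketch with hypothetical in-memory data and types. In a real app the lookup would hit the database, ideally backed by RLS-style rules.

```typescript
type User = { id: string; role: "user" | "admin" };
type Note = { id: string; ownerId: string; text: string };

class HttpError extends Error {
  status: number;
  constructor(status: number, message: string) {
    super(message);
    this.status = status;
  }
}

// Hypothetical in-memory data; a real app would query the database.
const notes = new Map<string, Note>([["n1", { id: "n1", ownerId: "u1", text: "alice's note" }]]);

function escapeHtml(s: string): string {
  return s
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&#39;");
}

// Checks ownership of this specific ID, not just "is logged in".
function getNote(user: User, noteId: string): Note {
  const note = notes.get(noteId);
  // Same answer for "does not exist" and "not yours", so IDs cannot be probed.
  if (!note || (note.ownerId !== user.id && user.role !== "admin")) {
    throw new HttpError(404, `note ${noteId} not accessible for user ${user.id}`);
  }
  return note;
}

// Generic message for the client; details go only to the server log.
function handle(user: User, noteId: string): { status: number; body: string } {
  try {
    return { status: 200, body: escapeHtml(getNote(user, noteId).text) };
  } catch (err) {
    console.error("[getNote]", err instanceof Error ? err.message : err);
    const status = err instanceof HttpError ? err.status : 500;
    return { status, body: status === 404 ? "Not found" : "Something went wrong" };
  }
}

console.log(handle({ id: "u1", role: "user" }, "n1")); // owner: 200, escaped text
console.log(handle({ id: "u2", role: "user" }, "n1")); // someone else: 404 "Not found"
```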

11) Iterative Review with a "Big Head" (Google AI Studio / Gemini 2.5 Pro)

When deep inspection is needed:

- Brief overview: "The module does X for Y; main logic in functions..."

- Goals: security, performance, duplicates, unnecessary dependencies.

- Boundaries: "No global restructuring; suggest local patches/steps."

Response Format that Works:

- Issue;

- Why it is an issue;

- Risk;

- Specific patch/steps.

Then proceed with local diff patches, small tests, and a review.

12) "Embedded" Errors: Procedure Without Panic

If, after 2-3 attempts, things are still "off":

- Ask the model to name the top suspects in the dependency chain.

- Add logs at the narrowest spots and give the model facts (stack trace, payload, boundaries).

- If necessary, rollback to a "green" commit and take small steps forward.

13) The Rule "Don't Touch Unless Asked" - and Why I Always Repeat It

AI loves to "tidy up" when it sees an opportunity. Protection - the concluding phrase in each prompt: "Don't change anything beyond the listed." After several iterations, the model begins to respect boundaries.

14) "Common AI Mistakes" File and "Instructions" as Code

- Maintain `ai_common_mistakes.md`: AI likes to move validation to the UI, change prop names, and delete necessary imports; all of this goes here.

- Keep an `instructions/` folder with markdown examples, prompt templates, "Cursor Rules," and short best practices.

- When starting a new feature, add a link to the mistakes file: it saves tokens and time.

15) Tools and Patterns That Accelerate Without Fuss

Tools:

- Storybook - isolated UI and documentation of patterns.

- Playwright / Vitest - fast E2E/unit tests; work well with diff patches.

- CodeSandbox / StackBlitz - instant sandboxes for PoC.

- Sourcegraph Cody: deep search and contextual patches.

- Continue / Aider / Windsurf / Codeium: lightweight assistants for when you get stuck.

- Tabby / local LLM: affordable generation for template code.

- Perplexity / Phind: quick technical research and approach comparison.

- MCP (Model Context Protocol): standardized access to files/commands without "chatter" in the prompt.

- Commit message generators (see also CommitGPT): save time, but read before pushing.

- ESLint with autofix: "entry-level" machine hygiene.

Work Patterns:

- Triple Pass Review: structure -> logic/data flow -> edge cases/security.

- Interface Freeze: lock the public API/props before deep generations.

- Context Ledger: short notes on features (files, solutions, outstanding TODOs) - easily transferable to a new chat.

- Session Reset Cadence: regular reset of long sessions.

- Red Team Self-Check: separate pass for injections, IDOR, races.

16) About Cursor Rules, Instructions, and "Not Being Afraid to Go Back"

- Cursor Rules: An excellent starting point; fix the stack, patterns, prohibitions, and anti-patterns.

- A folder with instructions: examples of components, short recipes for typical tasks (replacing the "hot memory" of the chat).

- If the model goes astray: go back one step, clarify the prompt and context—continuing "by inertia" is more costly.

17) Preventing Undesirable Changes from AI - Politely but Firmly

Be persistent in every request: "Do not add, delete, or rename anything that was not requested." This significantly reduces the "optimization reflex." Vulgar language is unnecessary - clear boundaries work better.

18) Mini-checklist before Commit

- The function fits into existing patterns and components.

- Types are strict, without `any`; basic tests and logs are present.

- Security: Secrets are on the server; permissions and validation are checked.

- UI is consistent: spacing, states, names.

- Commit message is short and informative.

Useful External Resources

Below are external, public sources (services and tools) that provide practical value in the "vibe" approach.

bolt.new

What: An online environment for quickly creating a framework (Full-stack/frontend) with initial code generation through AI.

Why: Instant MVP/prototype before investing in the structure of the main repository.

When: In the phase of idea validation or searching for a general form without detailed architecture.

GitHub Copilot

What: A tool for autocomplete and inline suggestions in the editor (VS Code, JetBrains).

Why: To speed up template code (configurations, helper functions, small React components) and reduce the amount of manual typing.

When: When a quick "sketch" or line completion is needed, rather than deep multi-file logic generation.

Claude

What: Models with strong contextual understanding and cautious outputs.

Why: Review of large code blocks, suggestions on structure, security, and style.

When: Before refactoring, to find duplications or potential vulnerabilities.

Cursor

What: IDE (fork of VS Code) with deep LLM integration (chat + patch generation + "Rules").

Why: Managed prompts, local diff-patches, quick iterations without manual copying.

When: In main development, when many small structural changes are needed.

cursor.directory

What: A catalog of ready-made Cursor Rules and prompt templates.

Why: A starter set of rules (style, architecture, protective constraints) instead of manual development.

When: At the stage of setting up the "rules of the game" in the project.

Google AI Studio (Gemini 2.5 Pro)

What: An interface to models with a large context window.

Why: Comprehensive checks - security, performance, duplications, dependencies; summarization before restructuring.

When: When the codebase is already significant, and a "strategic overview" is needed.

21st.dev

What: A collection of UI patterns with example prompts.

Why: To unify styles and component structures without inventing them from scratch.

When: Before scaling the frontend or standardizing forms/lists.

Sourcegraph Cody

What: Intelligent search across large repositories and patch generation.

Why: To find all function usages/dependencies and build a picture of connections before making changes.

When: before deep refactoring or module removal.

CodeSandbox

What: Cloud sandboxes for instant application launch without local installation.

Why: To test a library, evaluate an idea, or demonstrate a concept to a colleague.

When: Early validation or isolated demonstration.

Storybook

What: An isolated environment for viewing and testing UI components.

Why: To ensure the consistency of the design system and visually check states (loading/empty/error).

When: During the extraction of common components or before scaling the frontend.

Playwright

What: A tool for E2E tests (browser scenarios with high accuracy).

Why: To check key user flows after automatic patches by AI.

When: Before merging important changes or releasing a version.

Vitest

What: A fast unit/integration test runner for the Vite ecosystem and modern TypeScript.

Why: To cover pure functions and hooks to stabilize refactoring.

When: Right after extracting small utilities or logic into separate modules.

Perplexity

What: An LLM-powered search engine with summarized answers and links.

Why: Instantly learn API nuances, library statuses, and compare approaches.

When: Before choosing a stack or optimization.

LangSmith

What: Tools for assessing the quality of LLM integrations and prompts.

Why: Objectively evaluate whether results improve with your rules or corrections.

When: When you work with more than one prompt or model and need to manage quality.

Regex101

What: An online debugger for regular expressions with explanations.

Why: To quickly verify and correctly construct validation/parsing rules.

When: Before implementing complex validation in a form/API.

How to effectively combine:

- Idea -> bolt.new (prototype) -> transfer to Cursor.

- Structure -> Sourcegraph (dependencies) -> Claude (comments) -> local patches.

UI

- 21st.dev (pattern) + Storybook (review) + Tailwind (styles).

Security

- OWASP (checklist) + Gemini (audit) + Playwright (flows).

Cleanliness

- ESLint (rules) + TypeScript (strict types) + Vitest (tests).

Gradually introduce resources—not all at once: this reduces cognitive load and increases process stability.

Conclusion: Speed with a Framework.

Vibe coding is not chaos or an "end in itself." It is a cycle of "vision -> small step -> check -> stabilization." The better the vision and patterns, the more AI becomes a partner rather than a source of surprises and problems. Mistakes will occur, but with this set, you won't get lost—just continue to iterate without pain.

Thank You for Your Attention

Thank you for reading. I hope the material will be useful to you. If you liked it, please support our project.

P.S.: The image in the header is intentionally a bit quirky.

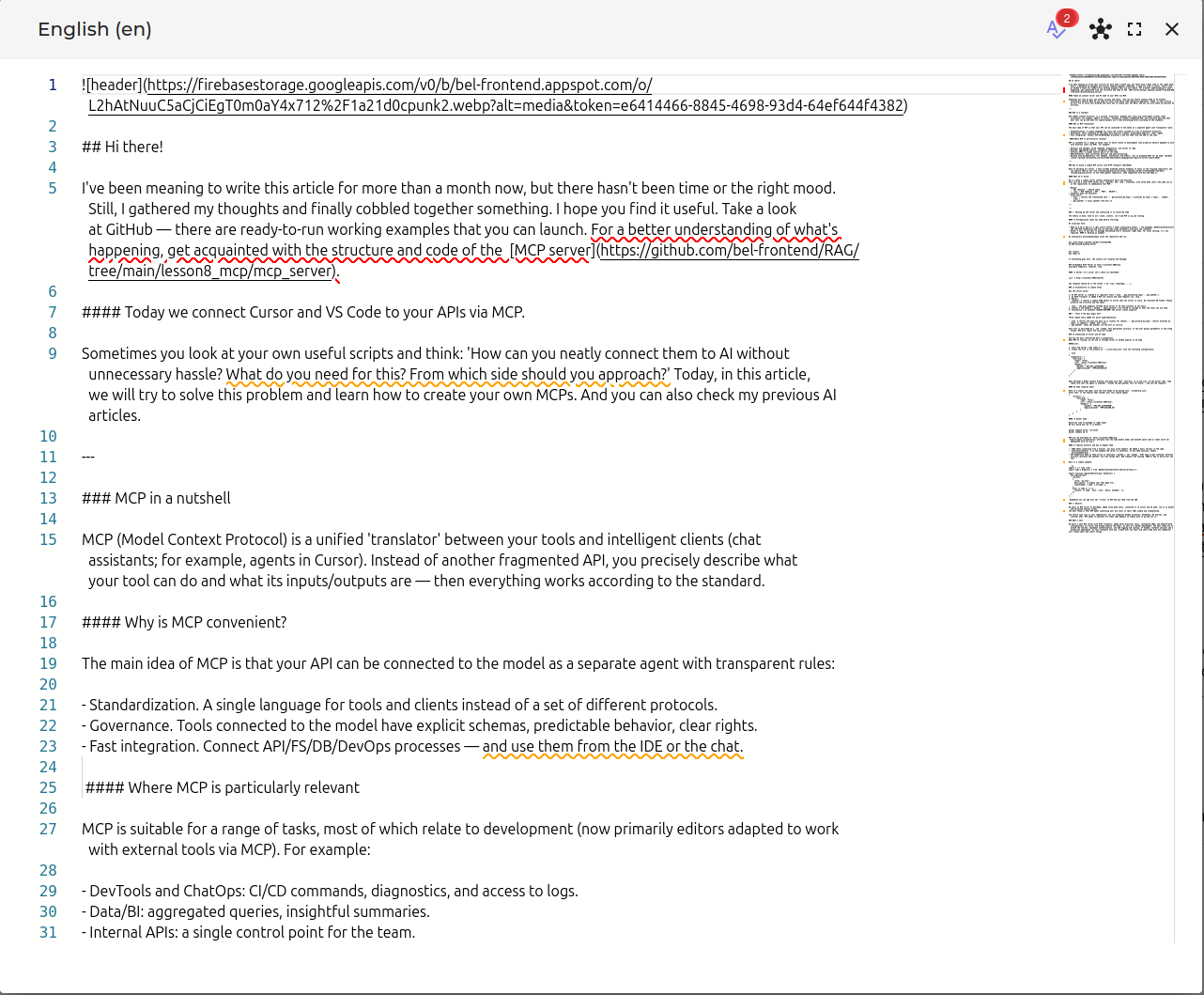

Hi there!

I've been meaning to write this article for more than a month now, but there hasn't been time or the right mood. Still, I gathered my thoughts and finally cobbled together something. I hope you find it useful. Take a look at GitHub — there are ready-to-run working examples that you can launch. For a better understanding of what's happening, get acquainted with the structure and code of the MCP server.

Today we connect Cursor and VS Code to your APIs via MCP.

Sometimes you look at your own useful scripts and think: 'How can you neatly connect them to AI without unnecessary hassle? What do you need for this? From which side should you approach?' Today, in this article, we will try to solve this problem and learn how to create your own MCPs. And you can also check my previous AI articles.

MCP in a nutshell

MCP (Model Context Protocol) is a unified 'translator' between your tools and intelligent clients (chat assistants; for example, agents in Cursor). Instead of another fragmented API, you precisely describe what your tool can do and what its inputs/outputs are — then everything works according to the standard.

Why is MCP convenient?

The main idea of MCP is that your API can be connected to the model as a separate agent with transparent rules:

- Standardization. A single language for tools and clients instead of a set of different protocols.

- Governance. Tools connected to the model have explicit schemas, predictable behavior, clear rights.

- Fast integration. Connect API/FS/DB/DevOps processes — and use them from the IDE or the chat.

Where MCP is particularly relevant

MCP suits a range of tasks, most of them related to development (today mainly in editors that can work with external tools via MCP). For example:

- DevTools and ChatOps: CI/CD commands, diagnostics, and access to logs.

- Data/BI: aggregated queries, insightful summaries.

- Internal APIs: a single control point for the team.

- RAG/automation: data collection and pre- and post-processing.

- Working with documentation (for example, Confluence), and more.

A list of suggested MCP servers for VS Code is available on GitHub.

How to build a simple MCP server with HTTP transport (Bun/Node)

Back to building our server. I have already prepared several example tools in the training repository; you can explore them in lesson8_mcp/mcp_server in the bel_geek repository. The code is compatible with Bun and Node.js.

What we'll build

We'll create a simple server without unnecessary bells and whistles.

This will be a local HTTP server with /healthz and /mcp, stateless, with three demo tools (the same set as in the repository) to immediately test MCP:

- Routes:

  - `GET /healthz` - health check.
  - `/mcp` - MCP endpoint (GET, POST, DELETE).

- Stateless mode (no sessions).

- Three tools:

  - `echo` - returns the text it receives.
  - `get_proverb_by_topic` - proverbs by topic (`topic`, `random`, `limit`).
  - `get_weather` - local weather from wttr.in.

🚀 Setting up the server and connecting it to Cursor/VS Code

The theory is done; time to act: clone, install, run — and MCP is up and running.

🔑 Prerequisites (what you need before starting)

No surprises here:

- Node.js ≥ 18 or Bun ≥ 1.x (Bun starts faster, with fewer extra steps).

- Two packages: @modelcontextprotocol/sdk (the MCP foundation) and zod (to describe input parameters precisely and reliably).

- Docker — only if you want to package everything into a container right away. For local testing, it's not required.

⚡️ Running an example

No unnecessary philosophy—simply clone the repository and run:

```bash
git clone https://github.com/bel-frontend/RAG
cd RAG/lesson8_mcp/mcp_server
```

```bash
bun install
bun index.ts
```

If everything goes well, the console will display the message:

```text
MCP Streamable HTTP Server on http://localhost:3002/mcp
Available endpoints: /healthz, /mcp
```

🎉 Server! It's alive! Let's check its heartbeat:

```bash
curl -s http://localhost:3002/healthz
```

The response should be in the format { ok: true, timestamp: ... }.

🧩 Architecture in simple terms

How the server works:

- An MCP server is created and registers the tools (`echo`, `get_proverb_by_topic`, `get_weather`).

- An HTTP transport is added so that MCP can receive and send requests via `/mcp`.

- Routes:

  - `/healthz` returns a simple JSON object to verify that the server is alive.
  - `/mcp` is the main endpoint through which Cursor or VS Code connects to the tools.

- Context: the headers (`apikey`, `applicationid`) are stored in storage so that the tools can use them.

- Termination: on shutdown (SIGINT/SIGTERM) the server closes properly.
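The article does not show how the headers are "stored in storage". One common Node/Bun approach is `AsyncLocalStorage`, which makes per-request data readable inside tool handlers without passing it through every call. The sketch below assumes that approach. `RequestContext`, `contextFromHeaders`, and `currentApiKey` are hypothetical names, not the repository's code.

```typescript
import { AsyncLocalStorage } from "node:async_hooks";
import type { IncomingHttpHeaders } from "node:http";

type RequestContext = { apiKey?: string; applicationId?: string };

const requestContext = new AsyncLocalStorage<RequestContext>();

// Node lowercases incoming header names, so "apiKey" from mcp.json arrives as "apikey".
function contextFromHeaders(headers: IncomingHttpHeaders): RequestContext {
  const first = (value: string | string[] | undefined) => (Array.isArray(value) ? value[0] : value);
  return { apiKey: first(headers["apikey"]), applicationId: first(headers["applicationid"]) };
}

// Inside a tool handler: read the context of the request that triggered the call.
function currentApiKey(): string | undefined {
  return requestContext.getStore()?.apiKey;
}

// In the HTTP handler, the MCP call would be wrapped like this (sketch):
// requestContext.run(contextFromHeaders(req.headers), () => transport.handleRequest(req, res, body));
const key = requestContext.run(contextFromHeaders({ apikey: "API_KEY_1234567890" }), () => currentApiKey());
console.log(key); // API_KEY_1234567890
```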

Key Implementation Details

The server architecture consists of three main components:

1. MCP Server & Transport

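```typescript
import { McpServer } from '@modelcontextprotocol/sdk/server/mcp.js';
import { StreamableHTTPServerTransport } from '@modelcontextprotocol/sdk/server/streamableHttp.js';

const mcp = new McpServer({ name: 'test-mcp', version: '0.1.0' });
const transport = new StreamableHTTPServerTransport({
  sessionIdGenerator: undefined, // stateless mode: no session IDs are issued
});
await mcp.connect(transport); // connect() returns a Promise
```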

2. HTTP Routing

Two endpoints handle all traffic:

- `/healthz`: health checks

- `/mcp`: MCP protocol communication (POST for tool calls, GET/DELETE for sessions)

3. CORS & Error Handling

The server includes proper CORS headers and graceful shutdown on SIGINT/SIGTERM signals.
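The routing, CORS, and shutdown behavior described above can be sketched with plain `node:http`. This is an approximation, not the repository's code. The exact CORS headers, the numeric `Date.now()` timestamp, and the `route` helper are assumptions. The `/mcp` branch is a placeholder where the real server would hand the request to the MCP transport.

```typescript
import { createServer } from "node:http";

const CORS_HEADERS: Record<string, string> = {
  "Access-Control-Allow-Origin": "*",
  "Access-Control-Allow-Methods": "GET, POST, DELETE, OPTIONS",
  "Access-Control-Allow-Headers": "Content-Type, Mcp-Session-Id, apikey, applicationid",
};

type Route = "preflight" | "healthz" | "mcp" | "not_found";

// Pure routing decision, kept separate from I/O so it is easy to test.
function route(method: string | undefined, url: string | undefined): Route {
  const path = new URL(url ?? "/", "http://localhost").pathname;
  if (method === "OPTIONS") return "preflight";
  if (path === "/healthz" && method === "GET") return "healthz";
  if (path === "/mcp" && (method === "GET" || method === "POST" || method === "DELETE")) return "mcp";
  return "not_found";
}

const server = createServer((req, res) => {
  for (const [name, value] of Object.entries(CORS_HEADERS)) res.setHeader(name, value);
  switch (route(req.method, req.url)) {
    case "preflight":
      res.writeHead(204).end();
      return;
    case "healthz":
      res.writeHead(200, { "Content-Type": "application/json" });
      res.end(JSON.stringify({ ok: true, timestamp: Date.now() }));
      return;
    case "mcp":
      // Placeholder: the real server passes the request to the MCP transport here,
      // e.g. transport.handleRequest(req, res, parsedBody).
      res.writeHead(501).end("MCP handling omitted in this sketch");
      return;
    default:
      res.writeHead(404).end("Not found");
  }
});

// Graceful shutdown: stop accepting new connections, then exit.
for (const signal of ["SIGINT", "SIGTERM"] as const) {
  process.on(signal, () => server.close(() => process.exit(0)));
}

// To run it: server.listen(3002, () => console.log("Listening on http://localhost:3002"));
```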

🛠 Tools — the main magic here

Three simple tools demonstrate MCP capabilities:

1. Echo Tool - Returns input text (useful for testing)

```typescript
import { z } from 'zod';

mcp.registerTool('echo', {
  title: 'Echo',
  description: 'Return the same text',
  inputSchema: { text: z.string() },
}, async ({ text }) => ({
  content: [{ type: 'text', text }],
}));
```

2. Proverbs Tool - Fetches Belarusian proverbs with filtering

```typescript
mcp.registerTool('get_proverb_by_topic', {
  inputSchema: {
    topic: z.string().optional(),
    random: z.boolean().optional(),
    limit: z.number().int().positive().max(200).optional(),
  },
}, async ({ topic, random, limit }) => {
  // PROVERBS_URL is defined elsewhere in the repository's server code
  const data: { message: string }[] = await fetch(PROVERBS_URL).then((r) => r.json());
  let items = data.map((d) => d.message);

  // Filter by topic (case-insensitive substring match)
  if (topic) {
    const needle = topic.toLowerCase();
    items = items.filter((m) => m.toLowerCase().includes(needle));
  }

  // Random order via an in-place Fisher-Yates shuffle
  if (random) {
    for (let i = items.length - 1; i > 0; i--) {
      const j = Math.floor(Math.random() * (i + 1));
      [items[i], items[j]] = [items[j], items[i]];
    }
  }

  // Apply the optional limit
  if (limit) {
    items = items.slice(0, limit);
  }

  return { content: [{ type: 'text', text: items.join('\n') }] };
});
```

3. Weather Tool - Shows current weather via wttr.in

```typescript
mcp.registerTool('get_weather', {
  inputSchema: { city: z.string() }
}, async ({ city }) => {
  // Encode the city so characters such as '/', '?' or '#' cannot alter the URL
  const weather = await fetch(`https://wttr.in/${encodeURIComponent(city)}?format=3`).then(r => r.text());
  return { content: [{ type: 'text', text: weather }] };
});
```

Key Points:

- Use Zod schemas for input validation

- Return format: `{ content: [{ type: 'text', text: string }] }`

- Handle errors gracefully with try-catch

- Add timeout handling for external APIs
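For the last two points, a minimal sketch of a wrapper that adds a timeout and a try-catch around any tool body and always returns the MCP result shape. `safeTool` and `withTimeout` are hypothetical names and the 5-second default is an arbitrary assumption; the `isError` flag is part of the MCP tool-result format and tells the client that the call failed.

```typescript
// MCP tool result shape used throughout this article; `isError` marks a failed call.
type ToolResult = { content: { type: 'text'; text: string }[]; isError?: boolean };

function textResult(text: string): ToolResult {
  return { content: [{ type: 'text', text }] };
}

// Run `work` with an AbortSignal and reject if it takes longer than `ms`.
async function withTimeout<T>(work: (signal: AbortSignal) => Promise<T>, ms: number): Promise<T> {
  const controller = new AbortController();
  let timer: ReturnType<typeof setTimeout> | undefined = undefined;
  const timeout = new Promise<never>((_, reject) => {
    timer = setTimeout(() => {
      controller.abort(); // cancels fetch() calls that received the signal
      reject(new Error(`Timed out after ${ms} ms`));
    }, ms);
  });
  try {
    return await Promise.race([work(controller.signal), timeout]);
  } finally {
    clearTimeout(timer);
  }
}

// Hypothetical wrapper: never throws, always returns a well-formed tool result.
async function safeTool(work: (signal: AbortSignal) => Promise<string>, ms = 5000): Promise<ToolResult> {
  try {
    return textResult(await withTimeout(work, ms));
  } catch (err) {
    const message = err instanceof Error ? err.message : String(err);
    return { ...textResult(`Error: ${message}`), isError: true };
  }
}
```

Inside a tool such as `get_weather`, the handler body could become `safeTool((signal) => fetch(url, { signal }).then((r) => r.text()))`, so a hung request to wttr.in is aborted instead of blocking the chat.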

🖇 Connecting to Cursor and VS Code

And now the most interesting part — integration. When MCP is running, we can use it through Cursor or GitHub Copilot in VS Code.

Cursor:

- Start the server (`bun index.ts`).

- Create the file `./.cursor/mcp.json` in the project with the following configuration:

```json
{
  "mcpServers": {
    "test-mcp": {
      "type": "http",
      "url": "http://localhost:3002/mcp",
      "headers": {
        "apiKey": "API_KEY_1234567890",
        "applicationId": "APPLICATION_ID"
      }
    }
  }
}
```

Open Settings → Model Context Protocol and make sure that test-mcp is in the list. Then, in the Cursor chat (with the agent enabled), type "Invoke the get_weather tool for Minsk" and check the response.

VS Code (Copilot Chat)

The setup is almost the same: the file goes into `.vscode/mcp.json`, and the top-level key is `servers` instead of `mcpServers`.

After that, your tool should appear among the tools available in Copilot Chat.

```json
{
  "servers": {
    "test-mcp": {
      "type": "http",
      "url": "http://localhost:3002/mcp",
      "headers": {
        "apiKey": "API_KEY_1234567890",
        "applicationId": "APPLICATION_ID"
      }
    }
  }
}
```

Pro tip: You can configure multiple MCP servers or use environment variables for credentials.

🐳 Docker Deployment - Production Ready

Quick Start:

Would you like to package it right away? Build and run it in Docker:

```bash
docker compose build --no-cache
docker compose up -d
```

MCP will be available at http://localhost:3002/mcp.

Understanding the Docker Setup

The repository includes a complete Docker configuration:

Dockerfile Example:

```dockerfile
FROM oven/bun:1 AS base
WORKDIR /app

COPY package.json bun.lockb ./
RUN bun install --frozen-lockfile --production

COPY . .

EXPOSE 3002

# Assumes curl is available in the image; if the base image lacks it,
# install it or replace this with a Bun-based check
HEALTHCHECK CMD \
  curl -f http://localhost:3002/healthz || exit 1

CMD ["bun", "index.ts"]
```

docker-compose.yml:

```yaml
version: '3.8'  # obsolete in Docker Compose v2 (ignored with a warning); can be removed

services:
  mcp-server:
    build: .
    container_name: mcp-server
    ports:
      - "3002:3002"
    environment:
      - NODE_ENV=production
    restart: unless-stopped
```

Useful Docker Commands:

```bash
# View logs
docker compose logs -f mcp-server

# Restart service
docker compose restart

# Stop and remove
docker compose down

# Rebuild and restart
docker compose up -d --build
```

Team-friendly collaboration: everyone works from the same setup and doesn't waste time on "it works for me but not for you."

🤔 Typical pitfalls and how to avoid them

- CORS: when connecting from a browser, you must allow the custom headers. A basic variant is included in the code.

- Stateless/Stateful: in the example the server is stateless. If you need sessions, enable `sessionIdGenerator`.

- API headers: in Node.js they arrive in lowercase (`apikey`), not `apiKey`. It's easy to get confused.

- External services (proverbs and weather) can slow things down. Add timeouts and caching.
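Two of these pitfalls fit in a few lines. The sketch below assumes headers arrive as a Node-style object with lowercase keys (as `IncomingMessage.headers` does) and uses a simple in-memory TTL cache for external responses. `getHeader` and `TtlCache` are hypothetical helpers, not part of the MCP SDK.

```typescript
// Node-style header object: keys are lowercase, values may be repeated.
type HeaderBag = Record<string, string | string[] | undefined>;

// Look up a header case-insensitively by lowercasing the requested name.
function getHeader(headers: HeaderBag, name: string): string | undefined {
  const value = headers[name.toLowerCase()];
  return Array.isArray(value) ? value[0] : value;
}

// Minimal in-memory cache whose entries expire after `ttlMs` milliseconds.
class TtlCache<V> {
  private readonly entries = new Map<string, { value: V; expiresAt: number }>();
  private readonly ttlMs: number;
  private readonly now: () => number;

  constructor(ttlMs: number, now: () => number = Date.now) {
    this.ttlMs = ttlMs;
    this.now = now; // injectable clock, handy for tests
  }

  get(key: string): V | undefined {
    const hit = this.entries.get(key);
    if (hit === undefined) return undefined;
    if (hit.expiresAt <= this.now()) {
      this.entries.delete(key); // drop stale entries lazily on read
      return undefined;
    }
    return hit.value;
  }

  set(key: string, value: V): void {
    this.entries.set(key, { value, expiresAt: this.now() + this.ttlMs });
  }
}
```

For example, `new TtlCache<string>(5 * 60_000)` would keep each city's weather for five minutes, so repeated questions in the chat don't hit wttr.in every time.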

✍️ How to build your own tool

Here is a simple example:

```typescript
import { z } from 'zod';
import type { McpServer } from '@modelcontextprotocol/sdk/server/mcp.js';

export function registerMyTools(mcp: McpServer) {
  mcp.registerTool(
    'my_tool',
    {
      title: 'my_tool',
      description: 'A simple tool that greets the user by name',
      inputSchema: { name: z.string() },
    },
    // The handler receives the validated input and returns MCP text content
    async ({ name }) => ({
      content: [{ type: 'text', text: `Hello, ${name}!` }],
    })
  );
}
```

Done: now you can add your own "tricks" to MCP and use them from the IDE.

✍️ Building Custom Tools

Here's a practical example of a database query tool:

```typescript
import { z } from 'zod';
import { Pool } from 'pg';
import type { McpServer } from '@modelcontextprotocol/sdk/server/mcp.js';

const pool = new Pool({ /* config */ });

export function registerDatabaseTools(mcp: McpServer) {
  mcp.registerTool('query_users', {
    description: 'Search users by name or email',
    inputSchema: {
      searchTerm: z.string().min(2),
      limit: z.number().int().positive().max(100).default(10)
    }
  }, async ({ searchTerm, limit }) => {
    // Parameterized query: user input is passed as $1/$2, never concatenated into SQL
    const result = await pool.query(
      'SELECT id, name, email FROM users WHERE name ILIKE $1 OR email ILIKE $1 LIMIT $2',
      [`%${searchTerm}%`, limit]
    );

    return {
      content: [{
        type: 'text',
        text: result.rows.map(r => `${r.name} <${r.email}>`).join('\n')
      }]
    };
  });
}
```

Best Practices:

- Always validate inputs with Zod

- Use parameterized queries to prevent SQL injection

- Handle errors gracefully and return useful messages

- Add timeouts for external API calls

- Keep tool descriptions clear and concise
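To make the SQL-injection point concrete, here is a dependency-free sketch that builds the parameterized query for `query_users` and also escapes the LIKE wildcards `%` and `_`, so a search term is matched literally. `buildUserSearch` and `escapeLike` are hypothetical helpers, and the escaping assumes PostgreSQL's default LIKE escape character (the backslash).

```typescript
interface ParamQuery {
  text: string;
  values: (string | number)[];
}

// Escape LIKE wildcards (and the escape character itself) so user input is matched literally.
// Assumes PostgreSQL's default LIKE escape character, the backslash.
function escapeLike(term: string): string {
  return term.replace(/[\\%_]/g, (ch) => `\\${ch}`);
}

// Hypothetical builder for the query_users tool: SQL text and values stay separate.
function buildUserSearch(searchTerm: string, limit = 10): ParamQuery {
  if (searchTerm.length < 2) throw new Error('searchTerm must be at least 2 characters');
  // Clamp the limit to 1..100, mirroring the Zod schema
  const safeLimit = Math.min(Math.max(Math.trunc(limit), 1), 100);
  return {
    text: 'SELECT id, name, email FROM users WHERE name ILIKE $1 OR email ILIKE $1 LIMIT $2',
    values: [`%${escapeLike(searchTerm)}%`, safeLimit],
  };
}
```

The result can be passed straight to node-postgres as `pool.query(q.text, q.values)`; the driver sends the values separately from the SQL text, so they are never parsed as SQL.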

🎯 Results

We built an MCP server on Bun/Node, added three demo tools, connected it to Cursor and VS Code, ran it in Docker and discussed typical issues. The main thing is that MCP makes connecting your own tools to smart IDEs simple and standardized.

The possibilities are limited only by your imagination: you can integrate DevOps processes, databases, BI queries, and internal APIs. With MCP, other team members can simply pick these tools up and use them.

What's next

We built a simple MCP server with HTTP transport, added three practical tools, configured CORS, and demonstrated configurations for Cursor and GitHub Copilot (VS Code), as well as a Docker deployment. The next steps are to extend the toolset, implement authentication, and add logging and caching, plus stateful sessions and a production deployment if needed. If this resonates with you, create your own tools and share them with the community! Let's build smart and useful things together.

Greetings! Today, I’m sharing a short guide on how to set up a project to work with GitHub Copilot.

Reliable AI workflow with GitHub Copilot: complete guide with examples (2025)

This guide shows how to build predictable and repeatable AI processes (workflows) in your repository and IDE/CLI using agentic primitives and context engineering. Here you will find the file structure, ready-made templates, security rules, and commands.

⚠️ Note: the functionality of prompt files and agent mode in IDE/CLI may change - adapt the guide to the specific versions of Copilot and VS Code you use.

1) Overview: what the workflow consists of

The main goal is to break the agent's work into transparent, controllable steps. The following building blocks serve this purpose:

- Custom Instructions (`.github/copilot-instructions.md`): global project rules (how to build, how to test, code style, PR policies).

- Path-specific Instructions (`.github/instructions/*.instructions.md`): domain rules targeted via `applyTo` (glob patterns).

- Chat Modes (`.github/chatmodes/*.chatmode.md`): specialized chat modes (for example, Plan/Frontend/DBA) with fixed tools and model.

- Prompt Files (`.github/prompts/*.prompt.md`): reusable scenarios/"programs" for typical tasks (reviews, refactoring, generation).

- Context helpers (`docs/*.spec.md`, `docs/*.context.md`, `docs/*.memory.md`): specifications, references, and project memory for precise context.

- MCP servers (`.vscode/mcp.json` or via the UI): tools and external resources the agent can use.

2) Project file structure

The following structure corresponds to the tools described above and helps to compose a full workflow for agents.

```text
.github/
  copilot-instructions.md
  instructions/
    backend.instructions.md
    frontend.instructions.md
    actions.instructions.md
  prompts/
    implement-from-spec.prompt.md
    security-review.prompt.md
    refactor-slice.prompt.md
    test-gen.prompt.md
  chatmodes/
    plan.chatmode.md
    frontend.chatmode.md
.vscode/
  mcp.json
docs/
  feature.spec.md
  project.context.md
  project.memory.md
```

3) Files and their purpose - technical explanation

This section explains how things are arranged under the hood: what these files are, why they exist, how they affect the agent's understanding of the task, and in what order they are merged or overridden. The code examples below match the specification.

| File/folder | What it is | Why | Where it applies |
|---|---|---|---|
| `.github/copilot-instructions.md` | Global project rules | Consistent standards for all responses | Entire repository |
| `.github/instructions/*.instructions.md` | Targeted instructions for specific paths | Different rules for frontend/backend/CI | Only for files matching `applyTo` |
| `.github/chatmodes/*.chatmode.md` | A set of rules + allowed tools for a chat mode | Separate work phases (plan/refactor/DBA) | When that chat mode is selected |
| `.github/prompts/*.prompt.md` | Task "scenarios" (workflows) | Re-run typical processes | When invoked via `/name` or the CLI |
| `docs/*.spec.md` | Specifications | Precise problem statements | When you @-mention them in the dialogue |
| `docs/*.context.md` | Stable references | Reduce "noise" in chats | By link/@-mention |
| `docs/*.memory.md` | Project memory | Record decisions to avoid repeats | By link/@-mention |
| `.vscode/mcp.json` | MCP server configuration | Access to GitHub/other tools | For this workspace |

Merge order of rules and settings (highest precedence first): Prompt frontmatter → Chat mode → Repo/Path instructions → Defaults.
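One way to read this order: for single-valued settings such as the model, the highest layer that sets a value wins, while instruction text from repo and path files is added together. The sketch below models that reading. It illustrates the stated order and is not Copilot's actual implementation; all names are hypothetical.

```typescript
// Hypothetical model of one configuration layer.
interface Layer {
  name: string;
  model?: string;
  instructions?: string[];
}

// `layers` is ordered from highest to lowest precedence:
// prompt frontmatter, chat mode, repo/path instructions.
function resolveSettings(layers: Layer[], defaultModel: string): { model: string; instructions: string[] } {
  // Single-valued setting: the first layer that defines it wins, else the default
  const model = layers.find((layer) => layer.model !== undefined)?.model ?? defaultModel;
  // Instruction text is additive: every layer contributes its rules
  const instructions = layers.flatMap((layer) => layer.instructions ?? []);
  return { model, instructions };
}

const resolved = resolveSettings(
  [
    { name: 'prompt', model: 'GPT-4o' },
    { name: 'chatmode', model: 'other-model', instructions: ['Do not edit files'] },
    { name: 'repo', instructions: ['Tests: npm run test'] },
  ],
  'default-model',
);
console.log(resolved.model); // GPT-4o
```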

And now let's review each tool separately.

3.1. Global rules - .github/copilot-instructions.md

What it is: A Markdown file with short, verifiable rules: how to build, how to test, code style, and PR policies.

Why: So that all responses rely on a single set of standards (no duplication in each prompt).

How it works: The file automatically becomes part of the system context for all questions within the repository. It has no `applyTo` field (more on that later), so it applies everywhere.

Minimal example:

```md
# Repository coding standards
- Build: `npm ci && npm run build`
- Tests: `npm run test` (coverage ≥ 80%)
- Lint/Typecheck: `npm run lint && npm run typecheck`
- Commits: Conventional Commits; keep PRs small and focused
- Docs: update `CHANGELOG.md` in every release PR
```

Tips:

- Keep points short.

- Avoid generic phrases.

- Include only what can affect the outcome (build/test/lint/type/PR policy).

3.2. Path-specific instructions - .github/instructions/*.instructions.md

What it is: Modular rules whose YAML frontmatter field `applyTo` holds glob patterns for the files they apply to.

Why: To differentiate standards for different areas (frontend/backend/CI). Allows controlling context based on the type of task.

How it works: When processing a task, Copilot finds all *.instructions.md whose applyTo matches the current context (files you are discussing/editing). Matching rules are added to the global ones.

Example:

```md
---
applyTo: "apps/web/**/*.{ts,tsx},packages/ui/**/*.{ts,tsx}"
---
- React: function components and hooks
- State: Zustand; data fetching with TanStack Query
- Styling: Tailwind CSS; avoid inline styles except dynamic cases
- Testing: Vitest + Testing Library; avoid unstable snapshots
```

Notes:

- Avoid duplicating existing global rules.

- Ensure the glob actually targets the intended paths.
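To check that a glob really targets the intended paths, a rough matcher helps. This sketch supports only `*`, `**/`, `**`, `?` and `{a,b}`, and splits `applyTo` on top-level commas; Copilot's own glob semantics may differ, so treat it as a sanity check rather than a reference. `matchesApplyTo` and its helpers are hypothetical.

```typescript
// Escape characters that have a special meaning in regular expressions.
function escapeRegExp(text: string): string {
  return text.replace(/[.*+?^${}()|[\]\\]/g, '\\$&');
}

// Convert one glob into an anchored RegExp. Supports '*', '**/', '**', '?' and '{a,b}'.
function globToRegExp(glob: string): RegExp {
  let source = '';
  for (let i = 0; i < glob.length; i++) {
    const ch = glob[i];
    if (ch === '*') {
      if (glob[i + 1] === '*') {
        if (glob[i + 2] === '/') {
          source += '(?:.*/)?'; // '**/' = zero or more directories
          i += 2;
        } else {
          source += '.*'; // '**' elsewhere = anything, including '/'
          i += 1;
        }
      } else {
        source += '[^/]*'; // '*' stays within one path segment
      }
    } else if (ch === '?') {
      source += '[^/]';
    } else if (ch === '{') {
      const end = glob.indexOf('}', i);
      if (end === -1) {
        source += '\\{'; // unmatched brace: treat literally
      } else {
        const alternatives = glob.slice(i + 1, end).split(',').map(escapeRegExp);
        source += `(?:${alternatives.join('|')})`;
        i = end;
      }
    } else {
      source += escapeRegExp(ch);
    }
  }
  return new RegExp(`^${source}$`);
}

// Split an applyTo value on commas that are not inside {...}.
function splitPatterns(applyTo: string): string[] {
  const patterns: string[] = [];
  let depth = 0;
  let current = '';
  for (const ch of applyTo) {
    if (ch === '{') depth++;
    if (ch === '}') depth--;
    if (ch === ',' && depth === 0) {
      patterns.push(current.trim());
      current = '';
    } else {
      current += ch;
    }
  }
  if (current.trim()) patterns.push(current.trim());
  return patterns;
}

function matchesApplyTo(applyTo: string, path: string): boolean {
  return splitPatterns(applyTo).some((pattern) => globToRegExp(pattern).test(path));
}

const applyTo = 'apps/web/**/*.{ts,tsx},packages/ui/**/*.{ts,tsx}';
console.log(matchesApplyTo(applyTo, 'apps/web/src/App.tsx'));   // true
console.log(matchesApplyTo(applyTo, 'apps/api/src/server.ts')); // false
```

Note that a naive `split(',')` would cut `{ts,tsx}` in half, which is why the splitter tracks brace depth.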

3.3. Chat modes - .github/chatmodes/*.chatmode.md

What it is: Config files that set the agent’s operational mode for a dialogue: a short description, the model (if needed) and a list of allowed tools.

Why: To separate work phases (planning/frontend/DBA/security) and restrict tools in each phase. This makes outcomes more predictable.

File structure:

```md
---
description: "Plan - analyze code/specs and propose a plan; read-only tools"
model: GPT-4o
tools:
  - "search/codebase"
---
In this mode:
- Produce a structured plan with risks and unknowns
- Do not edit files; output a concise task list instead
```

How it works:

- The chat mode applies to the current chat in the IDE.

- If you activate a prompt file, its frontmatter takes precedence over the chat mode (it can change the model and narrow `tools`).

- Effective allowed tools: the chat mode's tools, limited by the prompt's `tools` and the CLI `--allow`/`--deny` flags.
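The tool rule above amounts to set intersection and subtraction. A minimal sketch, assuming tools are identified by plain strings; the tool names in the example are hypothetical, and how the CLI flags map to the `allow`/`deny` lists is an assumption.

```typescript
interface ToolPolicy {
  chatModeTools: string[];
  promptTools?: string[]; // from prompt frontmatter, if a prompt file is active
  allow?: string[];       // e.g. from CLI allow flags (assumed mapping)
  deny?: string[];        // e.g. from CLI deny flags (assumed mapping)
}

// Start from the chat mode's tools, then only narrow: intersect with the prompt's
// tools and the allow list, and remove anything on the deny list.
function effectiveTools(policy: ToolPolicy): string[] {
  const { chatModeTools, promptTools, allow, deny } = policy;
  let tools = [...chatModeTools];
  if (promptTools) tools = tools.filter((tool) => promptTools.includes(tool));
  if (allow) tools = tools.filter((tool) => allow.includes(tool));
  if (deny) tools = tools.filter((tool) => !deny.includes(tool));
  return tools;
}

const planTools = effectiveTools({
  chatModeTools: ['search/codebase', 'edit', 'terminal'],
  promptTools: ['search/codebase', 'edit'],
  deny: ['edit'],
});
console.log(planTools.join(', ')); // search/codebase
```

Because every step only filters, a prompt file or a flag can never grant a tool that the chat mode did not already allow.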

Management and switching:

- In the IDE (VS Code):
  - Open the Copilot Chat panel.
  - In the top bar, choose the desired chat mode from the dropdown (the list is built from `.github/chatmodes/*.chatmode.md` plus the built-in modes).
  - The mode applies only to this thread. To change it, select another mode or create a new thread with the desired mode.
  - Check the active mode in the header/panel of the conversation; the References will show the